End-To-End Data Engineering Project in 40 Minutes | AWS Cloud | PySpark

ฝัง

- เผยแพร่เมื่อ 31 ธ.ค. 2023

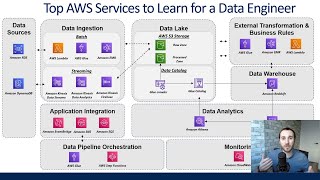

- Explore the world of AWS Data Engineering with this project. In this playlist we will leverage services like S3, Athena, Glue, Quicksight and many more services.

Stay Tuned. Like, Subscribe and Support.

Kaagle Link : shorturl.at/qBUX5

Processed Data : drive.google.com/drive/folder...

.

.

Project Series : • DE Projects

Snowflake : • Snowflake Tutorial

.

.

#dataengineer #project #aws #glue #awss3 #learnbydoing

![THE TOYS x NONT TANONT - ดอกไม้ที่รอฝน (spring) [Official MV]](http://i.ytimg.com/vi/pPa1d5cC8M4/mqdefault.jpg)

![ตีสิบเดย์ [FULL] | คู่รักแซฟฟิก "หลิงหลิง - ออม" จากซีรีย์ "ใจซ่อนรัก"](http://i.ytimg.com/vi/zs8AfphGn2w/mqdefault.jpg)

used aws glue under free teir cost a some amount after i month which i used because i was unaware of the extra charges..so i request u please provide a commet while making the video that which services can cost money and which services can be used under the free teir , that will be very helpfull for newbies like me...

Great project for beginners!!

Just watched 1 video, u gained a subscriber 🎉. Hope more from u😊

Amazing content... This is first AWS DE video I watched in practical and I am glad I found this video.

Thank You

Can you please share some automated way of doing ingestion process in s3 staging folder and some preprocessing demo followed up by some SCD Type 2 implementation on glue?

thats what i was looking for. thank you :)

also, you should create a playlist with all data engineering projects you already done, gonna be easy to find :)

good job dude!

That was really good to follow...100% worked and I learned so much more in 40min😀😃

i got a bill of 2.80 dollars by just running glue etl once...I dont know how im gonna create more projects if they keep billing like thiss..i cant afford fee rn...? what can i do?

Good please again one end to end aws-data project video

Great video, can you please explain the preprocessing part, what exactly did you use to preprocess the datasets, was it a python script in pandas or something else?

how did you preprocess data, what all you removed or changed while preprocessing the data

@datewithdata123

when I am running glue job it's successful but ouput files are not created in s3. Did you or anyone face similar issue?

in real time do we have to perform these task regarding IAM, etc or do we have to jst run terraform scripts or something similar and our architecture or cluster spins up? can you clear this real time working process?

I dont know why i am not able to see the output in datawarehouse, but i can see 100% success rate in job monitoring window. Could you tell me what will be the problem in this???

can anyone tell how to showcase the project in github or put it in resume????

Nice one bhai, very precise and clear explanation

Glad you like it

May I have your mail id please?

datewithdata1@gmail.com

plz also tell how to push these kind of projects on GITHUB

OH GOD! AWS UI always made me overwhelmed and scared me....But you just explained everything so beautifully...Thankyou soo much mann.....I finally feel confident that i can learn AWS and build awesome projects...

BTW will AWS charge us for using ATHENA and GLUE as they don't come under free trial...?

Yes.

For completing this project the bill will be less than half a dollar(if you don’t run a glue job a lot).

sit please attach

preprocessing of csv file code

Would you know why the 'Data preview' on joins may not populate any data aka 'No data to display'? I did a sanity check and the albums and artists files (in excel) , do indeed have matching data in the artist_id (album) to id (artist).

But when I join on those conditions, as you did, it doesn't populate any data. Just to see, I tried right and left join, and that actually populated data for each respective side (oddly enough).

Seems like a glitch, but because the script it simple and the join script looks correct. Do you know if the data types are converted or something else occurs behind the scenes when you join in Visual ETL?

I basically can't do the project because the subsequent nodes require data being fed from previous nodes. But there's no data at the first join (album/artist). Really odd.

Please check you have your data in s3.

Yes we do have the data in s3 but the same issue is also popup for me as well

could you please help me after sucessfully running Glue pipline data s not stored in final s3 bucket

Please share your error SC at datewithdata1@gmail.com

at time of trasforming enable to join table on condtion data is not fetching at column? is anybody help me

Same issue with me

can you do on s3,glue,emr,lambda,athena,redshift

Ongoing. Will be released soon

Question please. 26min:38sec timestamp - you mentioned that the job created multiple blocks. Why are there multiple blocks? Thank you!

We have created two worker nodes and since we have very little data. we could see that there were exactly 2 files in our warehouse table.

@@datewithdata123 Thank you!

Great video, can you let us know what did you use for preprocessing, was it a python script in pandas or something else?

can u please help y crawler is not running, it is asking some permission ,which permission we need to add

While joining the tables in visual etl, i could not add the condition as i could not look for colum names it is not showing me any columns

Solved?

Refresh it multiple times. it happened with me too

This may happen sometimes when you have slow internet connection. Bcz glue will read the schema from data present in S3. Hence the connection need to be set.

@@vichitravirdwivedi I already did it multiple times but no output

try to use infer schema, all of the fields popped up for me after doing that. @@himanshusaini011

In AWS glue when I am creating pipeline in transform join I am not getting option to select any source key can u plzz help

I used infer schema, and that seemed to fix the problem for me :)

@@FredRohnThank you so much,it works for me

I'm unable to the trackid from the join album and artist. What might be the reason

same

hey how u resolved this issue?

use infer schema, that fixed the problem for me@@KomalChavan-ht7wm

try infer schema, that made it work for me

Can you provide your github link for preprocessing data.

Sorry didn’t save the code. We have used visual etl so the code was auto generated.

Is S3 a data warehouse or data lake?

S3 is neither a warehouse nor a data lake; it's an object storage service provided by AWS, but can be used as both because it can manage large volumes of structured and unstructured data for analytics, processing, and other purposes.

hello bro, the services you are used in this project are comes in free tier right ? or we have to pay

Some of the services are not under free tier.

For completing this project the bill will be less than half a dollar(if you don’t run a glue job a lot).

@@datewithdata123 i got 2.80 dollar bill just after running etl once in glue

When i am trying to save visual etl job it is showing me error as create job:access denied exception

What is the policy we have to add in root account?

iam:PassRole

I am unable to find that policy in root account

Please help me

Or provide iam full access.

did you solve this issue? I am experiencing the same thing. @@udaykirankankanala3635

how do i do this? I'm having a similar issue@@datewithdata123

Crawler will not run with just s3 full access as shown here right?

You may need to add IAM:Full Access if you are working as an IAMUser

@@datewithdata123 I have added IAM:Full Access also within the role glue_access_s3 but again failed to run crawler.

@@sidharthv1060I think you need add AWSGlue service role

@@sidharthv1060 I am also facing the same issue repeatedly, even after providing all the required access.

@@supriya9047same

Hello can you please update the Processed Data Link please.

drive.google.com/drive/folders/1PgZQDvw5GnvVQuhV7-MtxIZHnLsZA-Zs?usp=drive_link

@@datewithdata123 thanks! (Y)

iam:PassRole error when trying to attach the role to the project. iam:PassRole looks very confusing, but I'm not sure why no one else is encountering this issue.

User: arn:aws:iam::905418287400:user/proj is not authorized to perform: iam:PassRole on resource: arn:aws:iam::905418287400:role/glue_access_s3 because no identity-based policy allows the iam:PassRole action

In the beginning while creating IAM user, plz add IAMFullAccess.

This is happening because the "iam:PassRole" action is required when a service like AWS Glue needs to pass a role to another AWS service.

@datewithdata123 OK, I will try that. I tried multiple solutions with regards to creating a new policy and attaching it to the user, but no luck. Hope that works. 🙏

@@datewithdata123 Yep, that worked. Thanks for that.

@@datewithdata123 I believe that change has affected the way joins are occurring. before i was able to join the album & artist join w/ the tracks. but now the ablum & artist join doesn't populate any data. it looks like people have similar issue when i google, but no solutions provide online. are you aware?

please upload the Glue script

Sorry didn’t save the code. We have used visual etl so the code was auto generated.

is it free ?

Yes