Physical Systems That Learn on Their Own with Andrea Liu


- Published on Jan 11, 2024

- Public Physics Talk "Physical Systems That Learn on Their Own" with Dr. Andrea Liu from the University of Pennsylvania, originally recorded on Wednesday, January 10, 2024 at the Aspen Center for Physics.

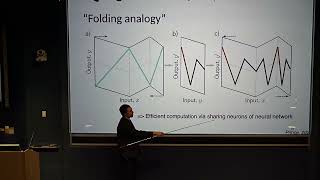

Artificial neural networks are everywhere now because they are so useful. They do everything from predicting the weather to writing essays and translating them into Spanish. Their abilities have exploded during the last dozen years because they have grown far bigger. Unfortunately, though, bigger neural networks require more energy to train and run. Our brains use only about a fifth of the calories we eat, yet they learn and perform a far greater variety of tasks. How does the network of neurons that makes up the brain manage all this at such low energy cost? The answer is that it doesn’t use a computer; it learns on its own. Neurons update their connections without knowing what all the other neurons are doing. We have developed an approach to learning that shares this key property but is far simpler than the brain's. Our approach exploits physics to learn and perform tasks for us. Using this approach, we have built physical systems that learn and perform machine-learning tasks on their own. Our work establishes a new paradigm for scalable learning.

Andrea Liu is a theoretical soft and living matter physicist. She was a faculty member in the Department of Chemistry and Biochemistry at UCLA for ten years before joining the Department of Physics and Astronomy at the University of Pennsylvania in 2004, where she is the Hepburn Professor of Physics and Director of the Center for Soft and Living Matter. She is a fellow of the American Physical Society (APS), the American Association for the Advancement of Science (AAAS) and the American Academy of Arts and Sciences, and a member of the National Academy of Sciences (NAS). Liu is currently a Councilor of the AAAS and the NAS, as well as a member of the Committee on Human Rights of the National Academies.

- Category: Science & Technology

great presentation, thanks

In the electric circuit model described by Professor Andrea Liu, "clamping" means imposing an extra voltage condition on the output of the circuit, so that the same network can be compared with and without that condition. Let's break down how this is done:

Dual Network Setup: The system consists of two identical electrical networks (twin networks), each made of variable resistors (representing the blood vessels in the brain vasculature model).

Applying Voltage Conditions:

In one network (the 'free' network), a voltage source is applied at one end (simulating the blood entering the brain), but the other end is left free: the output voltage is not imposed and settles to whatever value the circuit produces.

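In the other network (the 'clamped' network), the same input voltage is applied at one end, and a voltage is also imposed at the output end. This "clamps" the output, creating a target state for the comparison. In the coupled-learning scheme from Liu's group, the clamped output is usually nudged only part of the way from the free output toward the desired output, rather than set to the desired value outright.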
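To make the two states concrete, here is a minimal Python sketch under simplifying assumptions that are not taken from the talk: the "network" is just two resistors in series (a voltage divider), the source node is held at `V_SOURCE`, the far end is grounded, and the node between the resistors is the output. The clamped output is nudged a fraction `eta` toward the target, following the coupled-learning convention. All function names and values are hypothetical.

```python
# Minimal sketch (hypothetical names): a two-resistor voltage divider.
# Node S is held at V_SOURCE, node G at 0 V (ground), node O is the output.
# k1 is the conductance between S and O, k2 between O and G (conductance = 1/R).

V_SOURCE = 1.0


def free_output(k1: float, k2: float, v_source: float = V_SOURCE) -> float:
    """Output voltage when node O is left free (Kirchhoff's current law at O)."""
    # k1 * (v_source - v_out) = k2 * v_out  =>  v_out = v_source * k1 / (k1 + k2)
    return v_source * k1 / (k1 + k2)


def clamped_output(v_free: float, v_target: float, eta: float) -> float:
    """Output voltage imposed on the clamped twin: a nudge of size eta toward the target."""
    return v_free + eta * (v_target - v_free)


def voltage_drops(v_out: float, v_source: float = V_SOURCE) -> tuple[float, float]:
    """Voltage drop across resistor 1 (S to O) and resistor 2 (O to G)."""
    return v_source - v_out, v_out


if __name__ == "__main__":
    k1, k2 = 1.0, 1.0
    v_f = free_output(k1, k2)
    v_c = clamped_output(v_f, v_target=0.8, eta=0.5)
    print("free:", v_f, voltage_drops(v_f))
    print("clamped:", v_c, voltage_drops(v_c))
```

With `eta = 1` the clamped twin would sit exactly at the target. Smaller values keep the two states close, which is what makes the comparison in the next step informative.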

Operation:

The two networks settle into their physical states independently, and the voltage drop across each resistor in one network is compared with the drop across its twin in the other.

The resistances are then adjusted based on the differences in voltage drops between the free and clamped networks. Each pair of twin resistors is updated identically, using only the two voltage drops across itself, so no central computer has to know the state of the whole network. This adjustment is designed to reduce the difference between the actual output of the free network and the desired output.
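The adjustment step can be sketched as a purely local rule. The sketch assumes the coupled-learning form Δk = (α/η)·[(ΔV_free)² − (ΔV_clamped)²] for each conductance k. Treat the exact constants, and the parameters `alpha`, `eta` and `k_min`, as illustrative assumptions rather than the talk's hardware values. The point of the code is that each resistor needs only the drop across itself in the free twin and in the clamped twin.

```python
def update_conductance(k: float, drop_free: float, drop_clamped: float,
                       alpha: float, eta: float, k_min: float = 1e-6) -> float:
    """Local coupled-learning style update for one resistor's conductance k.

    Uses only the voltage drop across this resistor in the free twin and in the
    clamped twin. Conductances are kept at or above k_min so they stay physical.
    """
    delta_k = (alpha / eta) * (drop_free ** 2 - drop_clamped ** 2)
    return max(k + delta_k, k_min)


if __name__ == "__main__":
    # Drops for a divider with k1 = k2 = 1, a 1 V source, target 0.8 and eta = 0.5:
    # free drops (0.5, 0.5), clamped drops (0.35, 0.65).
    k1 = update_conductance(1.0, 0.5, 0.35, alpha=0.1, eta=0.5)
    k2 = update_conductance(1.0, 0.5, 0.65, alpha=0.1, eta=0.5)
    print(k1, k2)  # k1 grows and k2 shrinks, which raises the free output toward 0.8
```

A resistor whose drop is the same in both twins is left unchanged. Only resistors that "feel" the clamp adjust.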

Learning and Adapting: By repeatedly adjusting the resistances based on the differences between the free and clamped states, the network "learns" to produce the desired output when given a specific input. This mimics the learning process in biological systems, like adjusting blood flow in brain vasculature.
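Putting the free state, the clamped state and the local update into a loop gives a toy version of this repeated adjustment. The sketch is self-contained and rests on the same assumptions as a two-resistor divider with nudged clamping: starting conductances of 1 and hypothetical values for `alpha`, `eta` and `steps`. It illustrates the idea and is not the talk's hardware or code.

```python
def train_divider(v_target: float, steps: int = 100, alpha: float = 0.5,
                  eta: float = 0.1, v_source: float = 1.0,
                  k_min: float = 1e-6) -> tuple[float, float, float]:
    """Repeat free state -> clamped state -> local update on a two-resistor divider.

    Returns the final conductances (k1, k2) and the final free output voltage.
    """
    k1, k2 = 1.0, 1.0
    for _ in range(steps):
        # Free state: the output node settles where Kirchhoff's current law holds.
        v_free = v_source * k1 / (k1 + k2)
        # Clamped state: the output is nudged a fraction eta toward the target.
        v_clamped = v_free + eta * (v_target - v_free)
        # Each resistor compares only its own voltage drop in the two twins.
        drops_free = (v_source - v_free, v_free)
        drops_clamped = (v_source - v_clamped, v_clamped)
        k1 = max(k1 + (alpha / eta) * (drops_free[0] ** 2 - drops_clamped[0] ** 2), k_min)
        k2 = max(k2 + (alpha / eta) * (drops_free[1] ** 2 - drops_clamped[1] ** 2), k_min)
    return k1, k2, v_source * k1 / (k1 + k2)


if __name__ == "__main__":
    for target in (0.2, 0.5, 0.8):
        k1, k2, v_out = train_divider(target)
        print(f"target {target:.2f} -> learned output {v_out:.4f} (k1={k1:.3f}, k2={k2:.3f})")
```

With these arbitrary parameters the free output should end up close to each target. A target of 0.5 leaves the conductances untouched, because the free and clamped states already coincide.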

Conservation Law Compliance: A key aspect of this system is that it automatically complies with the conservation laws (like Kirchhoff's laws in electrical circuits) during the learning process. This compliance is inherent to the physical nature of the circuit and does not require computational resources to enforce.
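Kirchhoff's current law says that the currents flowing into every free node sum to zero. In hardware the circuit settles into that state by itself, but in a simulation a solver has to do the work. Below is a minimal sketch using Gauss–Seidel relaxation, which repeatedly sets each free node to the conductance-weighted average of its neighbours (the same condition written another way). `net_current_into` then checks that the result conserves current. The bridge network, its conductances and both helper names are hypothetical choices for illustration.

```python
# Hypothetical helpers; conductances in siemens, voltages in volts.
Edge = tuple[int, int, float]  # (node a, node b, conductance)


def relax(num_nodes: int, edges: list[Edge], fixed: dict[int, float],
          sweeps: int = 500) -> list[float]:
    """Gauss-Seidel relaxation: set each free node to the conductance-weighted
    average of its neighbours, i.e. enforce Kirchhoff's current law node by node."""
    neighbours: list[list[tuple[int, float]]] = [[] for _ in range(num_nodes)]
    for a, b, k in edges:
        neighbours[a].append((b, k))
        neighbours[b].append((a, k))
    v = [fixed.get(i, 0.0) for i in range(num_nodes)]
    for _ in range(sweeps):
        for i in range(num_nodes):
            if i in fixed or not neighbours[i]:
                continue  # boundary nodes keep their imposed voltage
            total_k = sum(k for _, k in neighbours[i])
            v[i] = sum(k * v[j] for j, k in neighbours[i]) / total_k
    return v


def net_current_into(node: int, v: list[float], edges: list[Edge]) -> float:
    """Sum of currents flowing into `node`; Kirchhoff's current law says this is 0."""
    current = 0.0
    for a, b, k in edges:
        if a == node:
            current += k * (v[b] - v[a])
        elif b == node:
            current += k * (v[a] - v[b])
    return current


if __name__ == "__main__":
    # Bridge network: node 0 held at 1 V, node 3 grounded, nodes 1 and 2 free.
    edges = [(0, 1, 1.0), (0, 2, 2.0), (1, 3, 1.5), (2, 3, 0.5), (1, 2, 1.0)]
    v = relax(4, edges, fixed={0: 1.0, 3: 0.0})
    for node in (1, 2):
        print(node, round(v[node], 6), net_current_into(node, v, edges))
```

Because every free node balances its currents, the current leaving the source equals the current arriving at ground. A physical circuit gets this for free, which is the energy argument made in the talk.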

In summary, "clamping" in the electric circuit model means imposing a desired output on one of the twin networks. The resistances are then adjusted until the free network produces that output on its own, mimicking a learning process. This method lets the system adapt and learn from the differences between the uncontrolled (free) and controlled (clamped) states.

I suspect nonlinear networks are less prone to catastrophic forgetting because the activation function acts as a gating mechanism that prevents overwriting. Furthermore, as circuits scale up and the number of trainable parameters increases, the network wires itself into sub-circuits (subroutines). These become even more immune to overwriting, because the "access keys" needed to reach or overwrite them become increasingly specific in a higher-dimensional space.

I wonder how difficult it would be to implement these circuits on a Field-Programmable Analog Array (FPAA), the analog counterpart of an FPGA. An FPAA consists of a matrix of analog components (such as op-amps, capacitors and resistors) that can be configured to form different analog circuits. GPT-4 could probably help generate the configuration to set them up, although FPAAs are usually programmed with vendor design tools rather than VHDL, which targets digital FPGAs.


Absolute nonsense as expected.

Wipe-Worthy!!!

She is an elected member of the National Academy of Sciences. And who are you?