Spark memory allocation and reading large files | Spark Interview Questions


- Published on 5 Feb 2025

- Hi Friends,

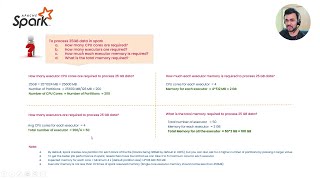

In this video, I have explained Spark memory allocation and how a 1 TB file is processed by Spark.

Please subscribe to my channel for more interesting learnings.

I have seen several videos, and you are the best. I appreciate your efforts.

Thanks a lot, San.

Thank you, ma'am! The concept is clear. I think that is how Spark achieves efficient pipelining.

Yes, thank you.

Thanks for the wonderful video

Thanks a lot

Crystal clear explanation

@RajKumar-zw7vt Thank you 😊

Nice explanation, very useful

Thanks a lot 😊 👍

Nice explanation 👌

Thanks a lot 😊 👍

Good explanation. I have one question: how did you calculate the number of blocks for a 1 TB file?

In the video, are you saying 84 lakh blocks? If so, how do you calculate this number?

Thank you. Consider each Hadoop block size as 128 MB.

1 TB = 1,000,000 MB (decimal units)

If 128 MB = 1 block, how many blocks do we need for 1 TB?

1,000,000 / 128 = 7812.5, which rounds up to 7813 blocks (the last block is only partly filled).

@sravanalakshmipisupati6533 1,000,000 / 128 ≈ 7812, but in the video you said something else; that's why I asked.

Yes, that could be a human error.
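A minimal Python sketch of the block arithmetic above, assuming a 128 MB block size. It computes the count in decimal units (1 TB = 1,000,000 MB, as in the reply) and in binary units (1 TiB = 1,048,576 MB), and rounds up because a partly filled last block still counts as a block. The helper name `num_blocks` is hypothetical.

```python
import math

BLOCK_MB = 128  # assumed HDFS block size, as in the discussion

def num_blocks(file_mb: int, block_mb: int = BLOCK_MB) -> int:
    """Blocks needed to store a file; a partly filled last block still counts."""
    return math.ceil(file_mb / block_mb)

print(num_blocks(1_000_000))    # 7813  (1 TB in decimal units)
print(num_blocks(1024 * 1024))  # 8192  (1 TiB in binary units)
```

Either way the result is in the thousands; 84 lakh would be 8,400,000 blocks.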

But what if we have to perform a group-by or join operation? Then we would need all the data in RAM for processing, right?

In Apache Spark, the processing of large datasets involves dividing the data into partitions, and not all the data needs to be stored in memory at once. Spark processes data in a distributed and parallelized manner, and it uses a concept called Resilient Distributed Datasets (RDDs) or DataFrames (in the case of Spark SQL) to represent distributed collections of data.

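When you perform a join or a group by in Spark, the data is processed in a distributed fashion across the available nodes in the cluster, and each node works on its own partitions of the data. Spark first shuffles the data so that all rows with the same key end up in the same partition; each task then processes one partition at a time, spilling to disk if the partition does not fit in memory. Within a stage, operations are pipelined, and data is passed between stages through the shuffle.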
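The shuffle idea can be illustrated with a toy, pure-Python model (not Spark's implementation): rows are routed to a fixed number of partitions by hashing their key, and each partition is then aggregated on its own, so no single task ever needs the whole dataset. `zlib.crc32` is a stand-in for Spark's own hash function, and `NUM_PARTITIONS` and the sample rows are hypothetical.

```python
import zlib
from collections import defaultdict

# Hypothetical (key, value) rows; in Spark these would be spread across many input partitions.
rows = [("a", 1), ("b", 2), ("a", 3), ("c", 4), ("b", 5), ("c", 6), ("a", 7)]
NUM_PARTITIONS = 3  # stand-in for the number of shuffle partitions

def shuffle(rows, num_partitions):
    """Route each row to a partition chosen by hashing its key."""
    partitions = [[] for _ in range(num_partitions)]
    for key, value in rows:
        partitions[zlib.crc32(key.encode()) % num_partitions].append((key, value))
    return partitions

def aggregate_partition(partition):
    """Sum values per key using only the rows of one partition."""
    sums = defaultdict(int)
    for key, value in partition:
        sums[key] += value
    return dict(sums)

partitions = shuffle(rows, NUM_PARTITIONS)
# Each partition is aggregated independently, like separate tasks.
results = [aggregate_partition(p) for p in partitions]
# Every key lives in exactly one partition, so combining results is a plain union.
combined = {key: total for part in results for key, total in part.items()}
print(sorted(combined.items()))  # [('a', 11), ('b', 7), ('c', 10)]
```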

👍👍

Thanks for this video. I have one question: if I have 500 GB of data, what would be the ideal cluster configuration to process it?

Thank you. Whatever the file size, the entire file is not loaded into memory at once. So size the cluster based on how quickly you want to process the data: you can add more memory (and cores) to process more of it in parallel.
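As a loose analogy (plain Python, not Spark), reading a stream in fixed-size chunks keeps peak memory bounded by the chunk size rather than the file size. `CHUNK_BYTES` is a hypothetical, deliberately tiny stand-in for a 128 MB split, and `io.BytesIO` stands in for a large file.

```python
import io

CHUNK_BYTES = 4  # tiny stand-in for a 128 MB split, to keep the example small

def count_lines_in_chunks(stream, chunk_bytes=CHUNK_BYTES):
    """Count newlines while holding at most one chunk in memory at a time."""
    total = 0
    peak = 0
    while True:
        chunk = stream.read(chunk_bytes)
        if not chunk:  # end of the stream
            break
        peak = max(peak, len(chunk))
        total += chunk.count(b"\n")
    return total, peak

data = io.BytesIO(b"row1\nrow2\nrow3\nrow4\n")
print(count_lines_in_chunks(data))  # (4, 4)
```

The file could be any size; only `chunk_bytes` determines how much is held at once.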

What I understand is that if we give less memory, the job takes longer. But then why do jobs fail with out-of-memory errors?

@tolava123 We need to assign enough overhead memory; otherwise the job fails with OOM issues.
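For reference, Spark's documented default for `spark.executor.memoryOverhead` is executor memory × `spark.executor.memoryOverheadFactor` (0.10 by default for JVM jobs), with a 384 MiB minimum. The sketch below models only that default; check the configuration docs for your Spark version, since defaults differ across versions and for non-JVM jobs on Kubernetes. If the executor's non-heap usage exceeds this allowance, the cluster manager can kill the container, which is one way memory failures show up even when the heap itself is large enough. The function name is hypothetical.

```python
MIN_OVERHEAD_MIB = 384   # documented minimum for the default overhead
OVERHEAD_FACTOR = 0.10   # documented default spark.executor.memoryOverheadFactor for JVM jobs

def default_overhead_mib(executor_memory_mib: int, factor: float = OVERHEAD_FACTOR) -> int:
    """Overhead used when spark.executor.memoryOverhead is not set explicitly."""
    return max(MIN_OVERHEAD_MIB, int(executor_memory_mib * factor))

for heap in (1024, 3072, 8192):
    print(heap, default_overhead_mib(heap))
# 1024 384
# 3072 384
# 8192 819
```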

Hi, a great explanation, no doubt. Can you please tell me how many executors there will be per machine?

Thank you. That depends on the memory and cores available on each machine, and on how much memory and how many cores you assign to each executor.
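One common community rule of thumb (an assumption here, not an official Spark formula) is to leave about one core and 1 GB per machine for the OS and daemons, give each executor about five cores, and carve the memory overhead out of each executor's share. A sketch of that heuristic, with a hypothetical function name and a hypothetical 16-core, 64 GB worker; it ignores the 384 MiB overhead minimum.

```python
def executors_per_node(node_cores, node_mem_gb, cores_per_executor=5,
                       reserved_cores=1, reserved_mem_gb=1, overhead_factor=0.10):
    """Rule-of-thumb estimate of executors per machine and heap size per executor."""
    usable_cores = node_cores - reserved_cores     # leave a core for the OS and daemons
    usable_mem_gb = node_mem_gb - reserved_mem_gb  # leave memory for the OS and daemons
    count = usable_cores // cores_per_executor     # cores decide how many executors fit
    if count == 0:
        return 0, 0.0
    share_gb = usable_mem_gb / count               # memory slice per executor
    heap_gb = share_gb / (1 + overhead_factor)     # keep ~10% of the heap size for overhead
    return count, round(heap_gb, 1)

# Hypothetical worker: 16 cores, 64 GB RAM.
print(executors_per_node(16, 64))  # (3, 19.1)
```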

Are off-heap memory and overhead memory the same?

Off-heap memory is a specific memory region outside the Java heap that Spark can use for execution and storage (enabled with spark.memory.offHeap.enabled and sized with spark.memory.offHeap.size). Overhead memory (spark.executor.memoryOverhead) is a broader allowance for non-heap memory used by the executor process itself, such as JVM overheads, interned strings, and other native allocations. Both live outside the Java heap and are requested on top of the executor heap, so managing both is important for the performance and stability of Spark.
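Spark's configuration docs (3.x) state that the maximum memory of an executor's container is the sum of `spark.executor.memoryOverhead`, `spark.executor.memory`, `spark.memory.offHeap.size`, and `spark.executor.pyspark.memory`, so off-heap and overhead are separate line items. A minimal sketch of that sum, with a hypothetical function name and executor sizes:

```python
def container_memory_mib(executor_memory, memory_overhead, offheap_size=0, pyspark_memory=0):
    """Container size (MiB): heap + overhead + off-heap + PySpark worker memory."""
    return executor_memory + memory_overhead + offheap_size + pyspark_memory

# Hypothetical executor: 3 GiB heap, 1 GiB overhead, 512 MiB off-heap.
print(container_memory_mib(3072, 1024, offheap_size=512))  # 4608
```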

@sravanalakshmipisupati6533 Got it. Thanks, sister.

Wonderful 👌👌. You've gained one more subscriber 😊. I have a very simple question for you: what is "disk" here in Spark? Is it the driver's disk or the HDFS disk? In the persist operation, we have disk and memory options. I understand that memory is executor memory, but what is this disk 🙄? Could you please help? Also, there is one more concept, data spilling to disk; I'm badly confused by this disk 😭

Thanks a lot.

Disk means the computer's storage (not RAM), i.e. the local HDD of that particular node. Driver and worker nodes are just other computers; like any computer, each has RAM, a disk, CPU cores, etc. Once a worker's RAM is fully occupied, it spills the rest of the data to its own disk.

Imagine the entire cluster as a group of computers: one main computer acts as the driver, and the rest, smaller (memory-wise) computers act as workers. Each computer has some RAM (4 GB, 8 GB, etc.); once it is full, the data is sent to that computer's own disk (HDD). Sending data to disk once RAM is full is called data spilling. Spilling means something falling out of the flow.

Think of RAM as a container: once the container is full, the contents overflow, i.e. they are spilled. Data works the same way: it spills over to disk once RAM is full. Hope you got the idea.
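The overflow idea can be made concrete with a toy external sort in plain Python: when the in-memory buffer fills, its rows are sorted and written to a temporary file, and the sorted files are merged at the end. This is only an analogy for how Spark spills sort and aggregation data to the node's local disk, not Spark's code; `BUFFER_ROWS` is a hypothetical stand-in for a task's memory.

```python
import heapq
import tempfile

BUFFER_ROWS = 3  # hypothetical stand-in for the RAM available to one task

def external_sort(rows, buffer_rows=BUFFER_ROWS):
    """Sort more rows than fit in memory by spilling sorted runs to local disk."""
    spill_files = []
    buffer = []

    def spill():
        # Write the buffered rows, sorted, to a temporary file on local disk.
        f = tempfile.TemporaryFile(mode="w+")
        f.writelines(f"{x}\n" for x in sorted(buffer))
        f.seek(0)
        spill_files.append(f)
        buffer.clear()

    for row in rows:
        buffer.append(row)
        if len(buffer) == buffer_rows:  # "RAM" is full: spill to disk
            spill()
    if buffer:  # flush whatever is left as a final run
        spill()

    # Merge the sorted runs, reading each file one line at a time.
    merged = list(heapq.merge(*(map(int, f) for f in spill_files)))
    for f in spill_files:
        f.close()
    return merged, len(spill_files)

print(external_sort([9, 4, 7, 1, 8, 2, 6, 3]))  # ([1, 2, 3, 4, 6, 7, 8, 9], 3)
```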

@sravanalakshmipisupati6533 Lovely 👌👌. Just as we can calculate the RAM size, is there any way to calculate the HDD size? Also, what happens if the disk cannot hold the data spilled from RAM either? OOM?

@gyan_chakra It's the computer's storage; we can't calculate that. An RDD processes the data partition by partition, so in most cases disk spilling won't happen. If spilling does happen in a particular case, check for data skew and consider repartitioning.
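A toy sketch of what checking for skew can look like (plain Python, hypothetical data and names): hash-partitioning by a key that dominates the data piles most rows into one partition, while dealing rows out in turn (round-robin, roughly how `DataFrame.repartition(n)` without columns distributes rows) spreads them evenly. Note that this evens out partition sizes but does not by itself fix a later group by on the hot key, since that shuffles by key again.

```python
import zlib
from collections import Counter

def hash_partition_sizes(keys, num_partitions):
    """Rows per partition when rows are partitioned by a hash of their key."""
    counts = Counter(zlib.crc32(k.encode()) % num_partitions for k in keys)
    return [counts.get(p, 0) for p in range(num_partitions)]

def round_robin_sizes(num_rows, num_partitions):
    """Rows per partition when rows are dealt out in turn, ignoring the key."""
    return [len(range(p, num_rows, num_partitions)) for p in range(num_partitions)]

def skew_ratio(sizes):
    """Largest partition size divided by the average partition size (1.0 = even)."""
    return max(sizes) / (sum(sizes) / len(sizes))

# Hypothetical skewed data: one "hot" key appears in 90 of 100 rows.
keys = ["hot"] * 90 + [f"k{i}" for i in range(10)]
by_key = hash_partition_sizes(keys, 4)
even = round_robin_sizes(len(keys), 4)
print(skew_ratio(by_key) >= 3.6)  # True: one partition holds at least 90 rows
print(even, skew_ratio(even))     # [25, 25, 25, 25] 1.0
```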

@sravanalakshmipisupati6533 Thank you ❤️

I like the explanation. So instead of 84 lakh blocks, you meant to say 8,192 blocks, right?

- 20 executor machines
- 5 cores in each executor node (FYI: cores come in pairs: 2, 4, 6, 8, and so on)
- 6 GB RAM in each executor node
- 128 MB default block size

So the cluster can run 20 × 5 = 100 tasks in parallel. Here, one task processes one block, so 100 blocks can be processed at a time.

100 × 128 MB = 12,800 MB, and 12,800 MB / 1024 = 12.5 GB (so roughly 12 GB of data is processed in the first batch).

Each executor has 6 GB of RAM (20 executors × 6 GB = 120 GB of total RAM). So about 12 GB of RAM is occupied across the cluster at a time, i.e. 12 GB / 20 executors ≈ 0.6 GB per executor. The remaining 6 GB - 0.6 GB = 5.4 GB in each executor stays available for other users' jobs and programs.

So, with 1 TB = 1024 GB, 1024 GB / 12 GB ≈ 85, i.e. the whole dataset is processed in around 85 batches.

The values in this calculation are for understanding purposes; actual values may differ in real-world scenarios.

Please feel free to comment and correct me if I'm doing anything wrong. Thanks!

Thank you. Yes, the calculation is correct.
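The commenter's back-of-the-envelope numbers can be reproduced in a few lines of Python. The cluster figures are the assumptions from the comment, one task per core and one 128 MB block per task; the variable names are hypothetical.

```python
import math

# Figures from the comment (assumptions, not measurements).
EXECUTORS = 20
CORES_PER_EXECUTOR = 5
BLOCK_MB = 128
DATA_MB = 1024 * 1024  # 1 TB in binary units

parallel_tasks = EXECUTORS * CORES_PER_EXECUTOR            # one task per core
mb_per_wave = parallel_tasks * BLOCK_MB                    # data read in one wave
mb_per_executor_per_wave = CORES_PER_EXECUTOR * BLOCK_MB   # share of one executor
num_blocks = math.ceil(DATA_MB / BLOCK_MB)
num_waves = math.ceil(num_blocks / parallel_tasks)

print(parallel_tasks)             # 100
print(mb_per_wave / 1024)         # 12.5 (GB)
print(mb_per_executor_per_wave)   # 640 (MB)
print(num_blocks, num_waves)      # 8192 82
```

With the unrounded 12.5 GB per wave the count is 82 waves; rounding down to 12 GB gives the roughly 85 batches quoted in the comment. Real memory use per task can be larger than the raw block size once the data is deserialized.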

How do you know that 12 GB of RAM will be occupied in the cluster at a time?

@vaibhavverma1340 It won't occupy all the memory at once. Data is read partition by partition, based on the available executor memory.

@sravanalakshmipisupati6533 The replication factor is 3.

With 1 TB of data and a 128 MB block size, there are approximately 8,000 blocks. But if we consider the replication factor, 8,000 × 3 = 24,000.

So in total we need to process 24,000 partitions, right?

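@praneethchiluka9602 You only need 8,000 partitions for processing. The replicas are copies of the same blocks kept for fault tolerance, and each block is read only once.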

Can you help me understand how Spark decides how many tasks will run in parallel, for example for a 1 TB file?

I am aware that the number of tasks depends on the number of CPU cores assigned to the executors. But how does the calculation work?

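It's a straightforward calculation. Spark creates one task per partition; for a file read, that is roughly one task per input split (about one per 128 MB block). The number of tasks that can run in parallel equals the total number of executor cores (executors × cores per executor). The first set of tasks is assigned to the executors, and the remaining tasks wait until an executor core is free; then the next set of tasks is distributed to the free executors. Even for a 1 TB file, the total number of tasks also depends on the code, i.e. how you process the file: each action creates a job, and each shuffle splits a job into stages with their own tasks.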
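A simplified model of the "remaining tasks wait for a free executor" behaviour, in plain Python: the number of slots is executors × cores per executor ÷ `spark.task.cpus` (default 1), and each queued task starts on whichever slot frees up first. It ignores data locality, scheduling delays, and stage boundaries; the function name and task durations are hypothetical.

```python
import heapq

def simulate_schedule(task_durations, executors, cores_per_executor, task_cpus=1):
    """Greedy model: each queued task starts on the earliest free slot."""
    slots = executors * cores_per_executor // task_cpus  # tasks that can run at once
    free_at = [0.0] * slots  # time at which each slot becomes free (a min-heap)
    finish = 0.0
    for duration in task_durations:
        start = heapq.heappop(free_at)  # wait for the earliest free slot
        end = start + duration
        heapq.heappush(free_at, end)
        finish = max(finish, end)
    return slots, finish

# Hypothetical job: 8 equal tasks on 2 executors x 2 cores, so two waves of 4.
print(simulate_schedule([1.0] * 8, executors=2, cores_per_executor=2))  # (4, 2.0)
```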

100

Thank you

How can I find out why executor memory grows gradually? Spark runs on Kubernetes, with 4G driver memory and 3G + 1G (overhead) executor memory. How do I check which memory area is growing more, such as execution or storage, or why memory is growing at all? Once it reaches 99%, the executors are killed and there are no logs to check. Could you please suggest some pointers?

Is there a UDF?

Or sometimes a calculation gets stuck in a loop, garbage keeps accumulating faster than GC can reclaim it, and that causes memory issues. Try breaking the job into smaller parts to pinpoint exactly where it gets stuck. Once such issues are resolved, the memory issues should be resolved too.
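To reason about which region could be growing, it helps to know the default layout of an executor heap under Spark's unified memory manager: 300 MB reserved, `spark.memory.fraction` (default 0.6) of the rest shared by execution and storage, and `spark.memory.storageFraction` (default 0.5) of that shared region protected from eviction for cached data. Execution and storage can borrow from each other, and the 1G overhead sits outside the heap. A rough sketch for the 3G executor, assuming the JVM reports the full 3 GiB as heap (in practice it reports slightly less); the function name is hypothetical.

```python
RESERVED_MB = 300       # fixed reserved memory in Spark's unified memory manager
MEMORY_FRACTION = 0.6   # default spark.memory.fraction
STORAGE_FRACTION = 0.5  # default spark.memory.storageFraction

def memory_regions(heap_mb: float) -> dict:
    """Approximate split of an executor heap under the default unified memory model."""
    usable = heap_mb - RESERVED_MB
    unified = usable * MEMORY_FRACTION    # shared by execution and storage
    storage = unified * STORAGE_FRACTION  # storage share protected from eviction
    return {
        "reserved": RESERVED_MB,
        "unified (execution + storage)": round(unified, 1),
        "storage (eviction-protected)": round(storage, 1),
        "user memory": round(usable - unified, 1),  # user data structures, UDF objects
    }

print(memory_regions(3 * 1024))  # unified ≈ 1663.2, storage ≈ 831.6, user ≈ 1108.8 (MB)
```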

How is 1 TB equal to 84 lakh blocks when each block is 128 MB?

Actually it's:

Thank you. Consider each Hadoop block size as 128 MB.

1 TB = 1,000,000 MB (decimal units)

128 MB = 1 block

1,000,000 / 128 = 7812.5, which rounds up to 7813 blocks.

@sravanalakshmipisupati6533 So it's not 84 lakh, right?

You said it's around 84 lakh blocks.

Am I right, or am I missing something?

1 TB = 1,048,576 MB (binary units)

1,048,576 MB / 128 MB = 8,192 blocks

That's around 8 thousand blocks, not 84 lakh.

@Amarjeet-fb3lk Yes, you are correct.

When we use off-heap memory, is GC not used?

By using off-heap memory, Spark aims to reduce the impact of garbage collection pauses, which can be a significant issue in distributed data processing. However, you should still be aware of the memory usage and configure off-heap memory appropriately to avoid memory-related issues. Garbage collection may still occur for on-heap memory in your Spark application, but off-heap memory management helps mitigate some of the typical JVM GC-related problems.

@sravanalakshmipisupati6533 Thanks. Now it's clear.