Spark Interview Question | How many CPU Cores | How many executors | How much executor memory


- Published on 19 Jul 2024

- Learn Data Engineering using Spark and Databricks. Prepare to crack job interviews and perform extremely well in your current job/projects. Beginner to advanced level training on multiple technologies.

Fill out the inquiry form, and we will get back to you with a detailed curriculum and course information.

shorturl.at/klvOZ

========================================================

SPARK COURSES

-----------------------------------------------

www.scholarnest.com/courses/s...

www.scholarnest.com/courses/s...

www.scholarnest.com/courses/s...

www.scholarnest.com/courses/s...

www.scholarnest.com/courses/d...

KAFKA COURSES

--------------------------------

www.scholarnest.com/courses/a...

www.scholarnest.com/courses/k...

www.scholarnest.com/courses/s...

AWS CLOUD

------------------------

www.scholarnest.com/courses/a...

www.scholarnest.com/courses/a...

PYTHON

------------------

www.scholarnest.com/courses/p...

========================================

We are also available on the Udemy platform.

Check out the link below for our courses on Udemy.

www.learningjournal.guru/cour...

=======================================

You can also find us on O'Reilly Learning.

www.oreilly.com/library/view/...

www.oreilly.com/videos/apache...

www.oreilly.com/videos/kafka-...

==============================

Follow us on Social Media.

/ scholarnest

/ scholarnesttechnologies

/ scholarnest

/ scholarnest

github.com/ScholarNest

github.com/learningJournal/

======================================== - Science & Technology

Very well explained. Thank you so much.

Excellent. Thank you, sir.

Awesome explanation.

Thanks Sir!

Very well explained

Sir, I purchased your course in 2020.

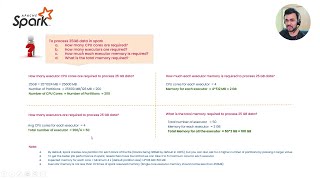

With this formula,

Memory of Executor = 2.5GB~3GB always

For X GB of data (with the default 128 MB partition size),

No. of Cores = 8*X

No. of Executors = 8/5*X
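Spelled out, the shortcut follows from the video's road map, assuming X is the data size in GB, 128 MB partitions, one core per partition, 5 cores per executor and 4x the partition size in memory per core:

```latex
\begin{aligned}
\text{partitions} &= \frac{1024X\ \text{MB}}{128\ \text{MB}} = 8X \\
\text{cores} &= \text{partitions} = 8X \\
\text{executors} &= \frac{\text{cores}}{5} = \frac{8X}{5} \\
\text{executor memory} &= 5 \times (4 \times 128\ \text{MB}) = 2560\ \text{MB} = 2.5\ \text{GB}\ (\text{rounded up to } 3\ \text{GB})
\end{aligned}
```

X only appears in the core and executor counts, which is why the memory per executor comes out the same for every data size.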

Can you explain serialization in Spark with an example that gives proper results?

Hi, what if the file is in a different storage location and the cluster manager is something other than YARN? How do we calculate it then?

Hi, is 4x some kind of standard? Please confirm.

What if the cluster size is fixed? Also, shouldn't we take per-node constraints into account? For example, what if a node has only 4 cores?

Hi, regarding the amount of memory:

In this case, is it always 3 GB for every data size? I think we have to tweak it according to the size of the data.

For the same 10 GB file, suppose we have the following resources:

38 GB worker memory with 10 cores, 8 GB driver memory with 2 cores, and shuffle partitions manually set to 80.

How will it behave?

In the last question, every value you took was a default (128 MB, 4x, 512 MB, 5 cores). So if the question were for 50 GB of data, would 3 GB still be the answer?

Great. But the interviewer's follow-up question is: how do we arrive at 4x memory per core?

Spark's reserved memory is 300 MB, and executor memory should be at least 1.5 times the reserved memory, i.e. 450 MB. That is why we take executor memory per core as 4x the partition size, which comes to 512 MB per core.
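The 300 MB and 450 MB figures in this reply match Spark's unified memory manager, which reserves 300 MB of heap and refuses to start an executor with less than 1.5 × 300 = 450 MB. A simplified Python sketch of that arithmetic (the function and constant names are hypothetical; this only mirrors the numbers of Spark's internal check, not its code):

```python
RESERVED_MB = 300                          # Spark's reserved system memory
MIN_EXECUTOR_MB = int(RESERVED_MB * 1.5)   # 450 MB minimum


def usable_heap_mb(executor_memory_mb):
    """Return the heap left after Spark's reserved 300 MB.

    Raises ValueError below the 450 MB minimum, mirroring Spark's startup check.
    """
    if executor_memory_mb < MIN_EXECUTOR_MB:
        raise ValueError(
            f"executor memory {executor_memory_mb} MB is below "
            f"the {MIN_EXECUTOR_MB} MB minimum"
        )
    return executor_memory_mb - RESERVED_MB


print(usable_heap_mb(512))   # 212  (one core at 4 x 128 MB)
print(usable_heap_mb(2560))  # 2260 (5 cores at 512 MB each)
```

Both the 512 MB single-core value and the 2560 MB five-core executor clear the 450 MB minimum.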

Data nodes = 10

16 CPUs per node

64 GB memory per node. Please tell me which cluster configuration we should choose.
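A commonly cited rule of thumb for fixed-size YARN clusters (not covered in the video) is: leave 1 core and 1 GB per node for the OS and Hadoop daemons, use about 5 cores per executor, give one executor slot to the YARN ApplicationMaster, and subtract the memory overhead (by default max(384 MB, 10% of executor memory)) from each container. A sketch under those assumptions; `size_cluster` and its parameters are hypothetical:

```python
import math


def size_cluster(nodes, cores_per_node, mem_gb_per_node,
                 cores_per_executor=5, reserved_cores=1, reserved_mem_gb=1,
                 overhead_fraction=0.10, overhead_min_gb=0.375):
    """Return (executors, cores per executor, executor heap in GB)."""
    # Assumption: 1 core and 1 GB per node are kept for the OS and daemons.
    usable_cores = cores_per_node - reserved_cores
    # Small nodes: never ask for more cores than a node can offer.
    cores_per_executor = min(cores_per_executor, usable_cores)
    executors_per_node = usable_cores // cores_per_executor
    # Assumption: one executor slot goes to the YARN ApplicationMaster.
    total_executors = nodes * executors_per_node - 1
    container_gb = (mem_gb_per_node - reserved_mem_gb) / executors_per_node
    # Container = heap + overhead, overhead = max(384 MB, 10% of heap).
    heap_gb = container_gb / (1 + overhead_fraction)
    if heap_gb * overhead_fraction < overhead_min_gb:
        heap_gb = container_gb - overhead_min_gb
    return total_executors, cores_per_executor, math.floor(heap_gb)


print(size_cluster(10, 16, 64))  # (29, 5, 19)
```

For 10 nodes with 16 cores and 64 GB each, this gives 29 executors with 5 cores and about 19 GB of heap. Capping the cores per executor at the usable cores per node also covers nodes with fewer than 5 usable cores.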

Sir, is there any way to get Databricks certification vouchers?

+1

If the number of cores per executor is 5:

At shuffle time, Spark creates 200 partitions by default. How will those 200 partitions be created if there are fewer cores, since one partition is stored on one core?

Suppose my config is 2 executors, each with 5 cores.

Now, how will it create 200 partitions if I do a group by operation?

There are 10 cores, and 200 partitions need to be stored, right?

How is that possible?

You can set the number of partitions equal to the number of cores for maximum parallelism. Of course, in this case you cannot process all 200 partitions at the same time.
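For reference, Spark does not need one core per partition: each partition becomes a task, each core runs one task at a time, and the remaining tasks wait for a free core. A minimal sketch of the resulting number of "waves", assuming every task takes roughly the same time (the helper name is illustrative):

```python
import math


def task_waves(num_partitions, executors, cores_per_executor):
    """Rounds of tasks needed when each core runs one task at a time."""
    total_cores = executors * cores_per_executor
    return math.ceil(num_partitions / total_cores)


# 200 shuffle partitions (the spark.sql.shuffle.partitions default)
# on 2 executors x 5 cores = 10 cores
print(task_waves(200, 2, 5))  # 20
```

So a group by on 10 cores still produces 200 shuffle partitions; they are simply processed 10 at a time. Matching partitions to cores, as suggested above, is done by lowering `spark.sql.shuffle.partitions`.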

How did you arrive at the assumption that each core needs 4x the partition size?

He's an indian, god spoke to him

Sir, what if we are reading a 100 GB file? In that case, the number of executors will be 160. Do you think 160 executors would be correct here?

Good question

I tried the same with the configuration below:

Question: If you have 100 GB of data, how many cores and executors do you need (considering we have only 50 GB of RAM and 40 cores in total)?

- The default partition size is 128 MB, and 100 GB is 102400 MB, so the total number of partitions is 102400/128 = 800.

- To achieve the highest parallelism, we need as many cores as partitions. But we don't have 800 cores. The recommended number of cores per executor is 5 for better HDFS I/O.

- So 40/5 = 8, meaning we can create up to 8 executors.

- We distribute the memory equally across these 8 executors: 50/8 = 6.25 ≈ 6 GB per executor.

- So, in the end, we have 8 executors with 5 cores each.

It will take some time to process all the data.
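The commenter's steps can be written out as a small calculation. A sketch, assuming the same 128 MB partitions and 5 cores per executor, and that the whole 50 GB can go to executor heaps (in practice the driver and memory overhead also need a share); `constrained_sizing` is a hypothetical name:

```python
import math


def constrained_sizing(data_gb, total_cores, total_ram_gb,
                       partition_mb=128, cores_per_executor=5):
    """Return (partitions, executors, GB per executor, task waves)."""
    partitions = math.ceil(data_gb * 1024 / partition_mb)
    executors = total_cores // cores_per_executor
    mem_per_executor_gb = math.floor(total_ram_gb / executors)
    # Fewer cores than partitions: tasks run in several rounds.
    waves = math.ceil(partitions / (executors * cores_per_executor))
    return partitions, executors, mem_per_executor_gb, waves


print(constrained_sizing(100, 40, 50))  # (800, 8, 6, 20)
```

The extra `waves` value shows why the job takes longer: 800 tasks on 40 cores run in about 20 rounds.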

Can you please explain why 4x memory is required for each core?

The basic idea here is that when we read compressed parquet files and load them into memory, they tend to expand. In general, we assume that the data can increase in size by about three to four times once it's uncompressed in memory. That's likely where this number comes from.

Thanks for your reply

I have applied 4x memory to each core for a 5 GB file, but no luck. Can you please help me resolve this issue?

Road map:

1) Find the number of partitions -> 5 GB (10240 MB) / 128 MB = 40

2) Find the CPU cores for maximum parallelism -> 40 cores for 40 partitions

3) Find the maximum allowed CPU cores for each executor -> 5 cores per executor for YARN

4) Number of executors = total cores / executor cores -> 40/5 = 8 executors

Amount of memory required:

Road map:

1) Find the partition size -> the default is 128 MB

2) Assign a minimum of 4x memory for each core -> how do I apply this?

3) Multiply it by the executor cores to get the executor memory -> ?

10240/128 is 80, not 40 (5 GB is 5120 MB, and 5120/128 = 40).

2) 4 × 128 MB block = 512 MB needed per core

3) 512 MB × 5 cores per executor = 2560 MB required per executor
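Both road maps can be combined into one small calculation. A minimal Python sketch, assuming the default 128 MB partition size, the 4x factor and 5 cores per executor used in the video; `road_map` and the constant names are illustrative:

```python
import math

PARTITION_MB = 128        # default partition (split) size
MEMORY_FACTOR = 4         # at least 4x the partition size per core
CORES_PER_EXECUTOR = 5    # recommended cores per executor on YARN


def road_map(data_mb):
    """Partitions -> cores -> executors -> executor memory (MB)."""
    partitions = math.ceil(data_mb / PARTITION_MB)            # step 1
    cores = partitions                                         # step 2: one core per partition
    executors = math.ceil(cores / CORES_PER_EXECUTOR)          # steps 3-4
    memory_per_core_mb = MEMORY_FACTOR * PARTITION_MB          # 512 MB
    executor_memory_mb = memory_per_core_mb * CORES_PER_EXECUTOR
    return partitions, cores, executors, executor_memory_mb


print(road_map(5 * 1024))   # 5 GB  -> (40, 40, 8, 2560)
print(road_map(10 * 1024))  # 10 GB -> (80, 80, 16, 2560)
```

Executor memory comes out as 2560 MB in both cases, because it depends only on the partition size, the 4x factor and the cores per executor; the data size only changes how many executors are needed.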

My conclusion from this video: whatever the data size (10 GB, 5 GB, or anything else), you always set executor-cores=5 and executor-memory=3g (i.e. 2560 MB rounded up).
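To make this concrete: only the executor count changes with data size. A sketch that turns the calculated values into the standard spark-submit flags `--num-executors`, `--executor-cores` and `--executor-memory`; the helper name and the round-up-to-whole-GB rule are assumptions:

```python
import math


def spark_submit_args(num_executors, executor_cores, executor_memory_mb):
    """Build spark-submit resource flags from the calculated sizes."""
    # Round the heap up to whole gigabytes, e.g. 2560 MB -> "3g".
    memory_gb = math.ceil(executor_memory_mb / 1024)
    return [
        "--num-executors", str(num_executors),   # honoured when running on YARN
        "--executor-cores", str(executor_cores),
        "--executor-memory", f"{memory_gb}g",
    ]


print(" ".join(spark_submit_args(16, 5, 2560)))
# --num-executors 16 --executor-cores 5 --executor-memory 3g
```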

Hello sir, how do we process 100 GB of data? How can we calculate the memory, executors, and driver? Please help me.

Did you find the answer? I'm interested.

16 executors, each with 5 cores and 3 GB of RAM.

How much data can be cached in each executor?

How much data can be processed?

What about shuffling?

And for narrow and wide transformations?

Any out-of-memory issues?

Do you really think a total of 80 cores and 3 × 16 = 48 GB of RAM is required to process 10 GB of data?

Please give a complete answer, sir.

That's how the formula works for maximum parallelism and doing everything in one shot.

You can run this on a single executor with 5 cores and 3 GB memory. It will work smoothly.
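The reply describes a trade-off rather than a hard requirement: fewer executors use fewer resources but process the 80 partitions of a 10 GB file in more rounds. A rough sketch, assuming equal-sized tasks and ignoring scheduling and shuffle costs; `tradeoff` is a hypothetical helper:

```python
import math

PARTITIONS = 80            # 10 GB / 128 MB
CORES_PER_EXECUTOR = 5
EXECUTOR_MEMORY_GB = 3


def tradeoff(executors):
    """Return (total cores, total executor memory in GB, task waves)."""
    total_cores = executors * CORES_PER_EXECUTOR
    waves = math.ceil(PARTITIONS / total_cores)
    return total_cores, executors * EXECUTOR_MEMORY_GB, waves


for n in (1, 4, 16):
    cores, mem_gb, waves = tradeoff(n)
    print(f"{n:>2} executor(s): {cores:>2} cores, {mem_gb:>2} GB, {waves} wave(s)")
```

A single executor finishes in 16 waves with 3 GB in total; 16 executors finish in one wave but hold 48 GB.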

@ScholarNest At 4:37, we assign a minimum of 4x memory to each core. How did we arrive at 4x? Why not some other number? Can you please answer, sir?

We give each core 4 times the partition size in memory. Think of it as dividing one core's memory into 4 portions: the data sits in one portion, and the remaining 3 portions handle the other work, so the executor does not run out of memory (OOM). If you still find that this is not sufficient, use a bigger multiplier in the memory allocation formula, for example 5 instead of 4.

@vinayak6685 If you look at Spark's memory distribution, you will find that the execution part of an executor gets only about 1/3 of the executor memory. And if we add off-heap memory, execution generally uses only about 1/4 of the executor memory.
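The fractions mentioned in this reply come from Spark's unified memory model: after the 300 MB reservation, `spark.memory.fraction` (default 0.6) of the remaining heap is shared by execution and storage, and `spark.memory.storageFraction` (default 0.5) of that is protected for cached data. A rough sketch for the 2560 MB, 5-core executor from the video, ignoring off-heap and overhead memory and assuming memory is split evenly across cores (Spark actually shares execution memory among the running tasks); `per_core_memory` is a hypothetical helper:

```python
RESERVED_MB = 300
MEMORY_FRACTION = 0.6     # default spark.memory.fraction
STORAGE_FRACTION = 0.5    # default spark.memory.storageFraction


def per_core_memory(executor_memory_mb, cores):
    """Approximate on-heap execution memory per core, in MB.

    Returns (guaranteed, maximum): execution can always reclaim the
    non-protected part of unified memory, and can borrow the rest
    when nothing is cached.
    """
    unified = (executor_memory_mb - RESERVED_MB) * MEMORY_FRACTION
    guaranteed = unified * (1 - STORAGE_FRACTION)
    return round(guaranteed / cores, 1), round(unified / cores, 1)


print(per_core_memory(2560, 5))  # (135.6, 271.2)
```

Under these assumptions, each core can count on about 136 MB of execution memory, close to one 128 MB partition, and up to about 271 MB when nothing is cached.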

The same reasoning applies to cores as well.

@ScholarNest sir, correct me if I am wrong.

Why is 4x memory required for each core?
