AWS Data pipeline - S3, Glue, Lambda, Airflow


- Published on Sep 22, 2023

- Project Credit: João Pedro

Tools to be used for the project

S3 to upload data and create different folders for different reasons

Lambda for extraction of data from PDF to raw JSON format

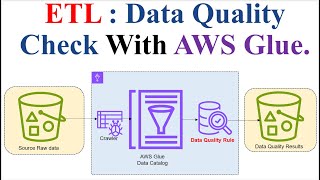

Glue for processing of data to get the questions from the data

Airflow: This is a workflow orchestrator. It’s a tool to develop, organize, order, schedule, and monitor tasks using a structure called a DAG (Directed Acyclic Graph). The DAGs are all Python code.
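To make the DAG idea concrete, here is a minimal sketch that uses only the Python standard library (not Airflow itself). The task names are hypothetical and only illustrate how dependencies fix a run order and why cycles are not allowed.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical task names. Each key maps to the set of tasks it depends on.
dependencies = {
    "download_pdf": set(),
    "upload_to_s3": {"download_pdf"},
    "run_glue_job": {"upload_to_s3"},
}


def execution_order(deps):
    """Return a run order in which every task comes after its dependencies."""
    return list(TopologicalSorter(deps).static_order())


def is_acyclic(deps):
    """A DAG must not contain cycles; report whether deps satisfies that."""
    try:
        execution_order(deps)
        return True
    except CycleError:
        return False


print(execution_order(dependencies))
print(is_acyclic({"a": {"b"}, "b": {"a"}}))
```

Airflow performs this kind of ordering for you; in a real DAG file the dependencies are declared between task objects rather than in a dictionary.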

The data: The data is from the Brazilian ENEM (National Exam of High School, in literal translation). This exam takes place yearly and is the main entrance door to most public and private Brazilian universities. We will use this data to do some data extraction and get questions from the exam.

Steps:

Create the Airflow environment by running: docker compose up (make sure you are in the directory that contains the docker compose file). Then access Airflow at localhost:8080.

Create an S3 bucket called primuslearning-enem-bucket (give a suitable name for your use case)

Create an IAM User called primuslearning-enem and grant it admin permissions and save the access keys.

In the Airflow UI (localhost:8080), under Admin - Connections, create a new AWS connection named AWSConnection, using the previously created access key pair.

Uploading files to AWS using Airflow: Create a Python file inside the /dags folder. I named mine primuslearning_process_enem_pdf.py
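As a rough sketch of what the upload step in such a DAG file might do, the helper below uses the S3Hook from Airflow's Amazon provider with the connection and bucket names from this description. The key layout (prefix/year/filename) and both function names are assumptions for illustration; the actual project may organize its folders differently.

```python
from pathlib import Path

BUCKET = "primuslearning-enem-bucket"  # bucket name from the steps
CONN_ID = "AWSConnection"              # Airflow connection name from the steps


def s3_key_for(local_path, year, prefix="pdf"):
    """Build an object key. The prefix/year/filename layout is hypothetical."""
    return f"{prefix}/{year}/{Path(local_path).name}"


def upload_pdf(local_path, year):
    """Upload one local file to the bucket through the Airflow connection."""
    # Imported lazily so the key helper can be tried without Airflow installed.
    from airflow.providers.amazon.aws.hooks.s3 import S3Hook

    hook = S3Hook(aws_conn_id=CONN_ID)
    key = s3_key_for(local_path, year)
    hook.load_file(filename=local_path, key=key, bucket_name=BUCKET, replace=True)
    return key


print(s3_key_for("/tmp/enem_2010.pdf", 2010))
```

Inside a DAG, upload_pdf would typically be wrapped in a Python task so Airflow can schedule and retry it.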

Create a ‘year’ variable in the Airflow UI (Admin - Variables). This variable simulates the ‘year’ for which the scraping script should execute, starting at 2010 and being automatically incremented (+1) at the end of the task execution.
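The read-then-increment behaviour of the ‘year’ variable can be sketched as below. The function name run_and_advance and the in-memory dictionary are stand-ins for illustration; in a real task the getter and setter would presumably be Airflow's Variable.get and Variable.set from airflow.models.

```python
FIRST_YEAR = 2010  # starting year mentioned in the description


def run_and_advance(get_var, set_var, work):
    """Run work(year) for the stored year, then store year + 1 for the next run.

    get_var and set_var are passed in so the logic can be tried without Airflow.
    If the variable does not exist, get_var raises and the task fails, which is
    why the variable has to be created before the first run.
    """
    year = int(get_var("year"))
    work(year)                       # if this raises, the year is not advanced
    set_var("year", str(year + 1))   # variables are stored as strings here
    return year


# In-memory stand-in for the Airflow variable store.
store = {"year": str(FIRST_YEAR)}
handled = []
run_and_advance(store.__getitem__, store.__setitem__, handled.append)
print(handled, store)
```

Incrementing only after the work succeeds means a failed run is retried for the same year instead of skipping it.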

Create a new Lambda function from scratch, name it process-enem-pdf, and choose the Python 3.9 runtime. Lambda will automatically create an IAM role. Make sure this role has read and write permissions on the primuslearning-enem-bucket S3 bucket. Increase the function's timeout to about 4 minutes.

Create a Python virtual env with venv: python3 -m venv pdfextractor

Activate the environment with: source pdfextractor/bin/activate and then install the dependencies with: pip3 install pypdf2 typing_extensions
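With PyPDF2 installed, the PDF-to-raw-JSON extraction could look roughly like the sketch below. The JSON shape (a source field plus a list of page records) and both function names are assumptions, not the project's actual format.

```python
import io
import json


def extract_page_texts(pdf_bytes):
    """Return the text of every page of a PDF given as bytes."""
    # Imported lazily so to_raw_json can be tried without PyPDF2 installed.
    from PyPDF2 import PdfReader

    reader = PdfReader(io.BytesIO(pdf_bytes))
    return [page.extract_text() or "" for page in reader.pages]


def to_raw_json(page_texts, source):
    """Serialize page texts into a raw JSON document (hypothetical layout)."""
    pages = [{"page": i, "text": t} for i, t in enumerate(page_texts, start=1)]
    # ensure_ascii=False keeps accented Portuguese characters readable.
    return json.dumps({"source": source, "pages": pages}, ensure_ascii=False)


print(to_raw_json(["Questão 1", "Questão 2"], "enem_2010.pdf"))
```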

Create a Lambda layer by running: bash prepare_lambda_package.sh and upload it to Lambda. (This has already been done to ease your work. Just upload the archive.zip file as a layer to AWS.)

Add an S3 trigger to the Lambda function. Make sure the suffix is .pdf and the event type is: All object create events
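When the trigger fires, the function receives an S3 notification event. The sketch below shows one way a handler might pull the bucket and object key out of that event; the output_key naming (same path with a .json extension) is an assumption, and the actual reading and writing of objects is left out.

```python
import json
from urllib.parse import unquote_plus


def pdf_objects(event):
    """Yield (bucket, key) for every .pdf object in an S3 notification event."""
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (for example, spaces become '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        if key.lower().endswith(".pdf"):
            yield bucket, key


def output_key(pdf_key):
    """Hypothetical naming: same path, with .json replacing the .pdf extension."""
    return pdf_key[:-4] + ".json"


def lambda_handler(event, context):
    """Report which objects would be processed and where results would go."""
    targets = [
        {"bucket": b, "source": k, "target": output_key(k)}
        for b, k in pdf_objects(event)
    ]
    return {"statusCode": 200, "body": json.dumps(targets)}


sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "primuslearning-enem-bucket"},
                "object": {"key": "2010/day+1.pdf"}}}
    ]
}
print(lambda_handler(sample_event, None))
```

Because the trigger is limited to the .pdf suffix, writing .json results into the same bucket should not invoke the function again.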

Create a Glue crawler to build a catalog of the dataset. Name it primuslearning-enem-crawler and make sure to select the bucket path up to the content folder. Make sure an IAM role is created, and also create a database named enem_pdf_project

Create a Glue job named Spark_EnemExtractQuestionsJSON, paste in the code from process_pdf_glue_job.py, and execute it from Airflow to see the complete pipeline in action.
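One possible way for an Airflow task to start the Glue job and wait for it is through a boto3 Glue client, sketched below. The client is passed in as a parameter, and FakeGlue is only a stand-in so the logic can be tried without AWS; in real use the client would presumably come from boto3.client("glue"). The helper names are assumptions.

```python
import time

JOB_NAME = "Spark_EnemExtractQuestionsJSON"  # job name from the steps
FINISHED = {"SUCCEEDED", "FAILED", "STOPPED", "TIMEOUT", "ERROR"}


def start_glue_job(glue_client, job_name=JOB_NAME):
    """Start the job and return the id of the new run."""
    return glue_client.start_job_run(JobName=job_name)["JobRunId"]


def wait_for_job(glue_client, run_id, job_name=JOB_NAME, poll_seconds=30):
    """Poll the run until it reaches a finished state and return that state."""
    while True:
        run = glue_client.get_job_run(JobName=job_name, RunId=run_id)
        state = run["JobRun"]["JobRunState"]
        if state in FINISHED:
            return state
        time.sleep(poll_seconds)


class FakeGlue:
    """Stand-in client for a dry run: reports RUNNING once, then SUCCEEDED."""

    def __init__(self):
        self.states = ["RUNNING", "SUCCEEDED"]

    def start_job_run(self, JobName):
        return {"JobRunId": "jr_demo"}

    def get_job_run(self, JobName, RunId):
        return {"JobRun": {"JobRunState": self.states.pop(0)}}


client = FakeGlue()
run_id = start_glue_job(client)
print(run_id, wait_for_job(client, run_id, poll_seconds=0))
```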

Make sure to delete all the resources you created afterwards to avoid unnecessary bills.

Pipeline repository: github.com/Primus-Learning/pi...

website: primuslearning.io

Contact: contact@primuslearning.io

LinkedIn: / primus-learning

#aws #devops #primuslearning #python #airflow #s3 #glue #howto #how #awssolutionsarchitects - Science & Technology

Hi! For anyone with the same problem at 35:20: you need to create the variable year and set a value so that the first task can run; otherwise it will fail. The process to create a variable is shown a little later, at 37:50. Good project, thank you for the video!

Thank you so much for helping out with this, Jorge. I really appreciate it. Thanks for watching.

Hi Primus, Your video is very informative as usual💯💯. Thank you 🙏

My Pleasure sir, thank you for watching.

Hi Primus, thank you very much for this tutorial. I have similar set up but I don't have any write permissions. When I need to create a file I have to type sudo touch file.py, when I want to save my file.py I need to press a button "run as sudo". Question: do you know how to avoid this?

Hi, thanks for your question. I think it depends on your setup and requirements. What system are you running on, and from which location are you running or creating your files? You may need to grant write permissions to the user you're using. That should solve the problem. But if you cannot, it's not a problem to use sudo.