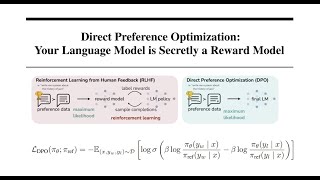

Direct Preference Optimization: Your Language Model is Secretly a Reward Model | DPO paper explained


- Published on 24 Jul 2024

- Direct Preference Optimization (DPO) explained: how to finetune LLMs on human preferences without reinforcement learning. DPO was one of the two Outstanding Main Track Runner-Up papers at NeurIPS 2023.

➡️ AI Coffee Break Merch! 🛍️ aicoffeebreak.creator-spring....

📜 Rafailov, Rafael, Archit Sharma, Eric Mitchell, Stefano Ermon, Christopher D. Manning, and Chelsea Finn. "Direct preference optimization: Your language model is secretly a reward model." arXiv preprint arXiv:2305.18290 (2023). arxiv.org/abs/2305.18290

Thanks to our Patrons who support us in Tier 2, 3, 4: 🙏

Dres. Trost GbR, Siltax, Vignesh Valliappan, @Mutual_Information , Kshitij

Outline:

00:00 DPO motivation

00:53 Finetuning with human feedback

01:39 RLHF explained

03:05 DPO explained

04:24 Why Reinforcement Learning in the first place?

05:58 Shortcomings

06:50 Results

▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀

🔥 Optionally, buy us a coffee to help with our Coffee Bean production! ☕

Patreon: / aicoffeebreak

Ko-fi: ko-fi.com/aicoffeebreak

▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀

🔗 Links:

AICoffeeBreakQuiz: / aicoffeebreak

Twitter: / aicoffeebreak

Reddit: / aicoffeebreak

YouTube: / aicoffeebreak

#AICoffeeBreak #MsCoffeeBean #MachineLearning #AI #research

Video editing: Nils Trost

Music 🎵 : Ice & Fire - King Canyon

- Category: Science and Technology


Great explanation, easy to follow through, pretty simplified to understand

Wow, two videos in one week? You're spoiling us!!

On why RLHF came first, it was invented by OpenAI, which had focused almost exclusively on RL stuff prior to GPT. "When all you have is a hammer..." as the saying goes.

I have not read the paper yet, but this sounds like supervised contrastive learning. If it is, then it's really astonishing that nobody came up with it before. I implemented some supervised contrastive learning myself... missed opportunity 😢

Exactly! Accept this coffee for consolation.

@@AICoffeeBreak thanks! ❤

I really enjoy your videos. Please keep up the good work! My theory for why no one thought of this earlier is your first reason: they thought there was no closed-form loss function. That is where RL comes in.

Thank you!

These videos are great! Really well explained, thank you so much for the effort you put into them :)

Thanks, this means a lot to us!

Thanks for the video! Very well explained, I just began looking into DPO and your video gives a great context.

Really excellent breakdown of DPO!

Given what we’ve seen with gains from the use of DPO in the open source community, it makes complete sense that it was at least one of the runners up at NeurIPS this year.

I’m really enjoying your explainer videos! Thank you for taking the time to make them!

Thank you so much for your positive feedback!

Good video :) really enjoyed watching it

Love your videos, thank you

Thanks for the pretty useful and timely explanation.

Thank you so much for such clear high level explanations.

Thank you for your visit and wonderful comment!

Great video and wonderful explanation. Thanks for covering the differences and thoughts about the limitations of just using DPO.

I am wondering why instruction finetuning was not mentioned? Wouldn’t SFT make the whole DPO process more efficient? Especially when sampling directly from a pre-trained model, it should be hard to even get good samples when the model hasn’t yet learned what questions and answers look like? No?

Instruction tuning is a separate procedure. It is normal supervised learning with task descriptions and it is now independent of the DPO vs RLHF discussion. We mentioned it in another video and left it out for this one focusing specifically on DPO.

Great overview and comparison!

Glad you like it!

Thanks!

Wow, thanks!

I’m no expert, but when RLHF was new, the most common justification I heard in explainer articles and videos was that the reward model was smaller than the LLM, so less likely to overfit on the human labels, and could be used to produce more data for the LLM to train on compared to just the expensive human-annotated data. So pretty much your second hypothesis.

Great video

Thanks!

Hi, I just came here because I saw you're a member of Sabine's channel. Wow, I did not expect a successful channel here too. Your videos are very well made, though computer science is not my field. I'll recommend your channel, all the best.

Hi, yes I am a huge fan of Sabine's channel as I studied physics in my bachelor's and master's. I watch her channel to stay up to date with what happens in physics. I also love the other topics she addresses, her takes on things, and the humour in her videos.

Lovely that you found my channel and I'm glad you wrote to say hi. 😁

@@AICoffeeBreak Thanks for your attention, surely we'll meet again 🙂

Thank you so much for your clear explanation ~~ it is really helpful :)) I hope you review other NeurIPS papers haha. Thanks ~~~

Glad it was helpful! Do you have a concrete paper recommendation from NeurIPS? :)

@@AICoffeeBreak So happy to leave the comment. Hope you're doing awesome! I stumbled upon this paper, 'Abide by the law and follow the flow: conservation laws for gradient flows,' and it's blowing my mind. 🤯 It got spotlighted at DL theory's oral session.

I tried diving into it and even watched the presentation, but I'm kinda lost. The concept of conservation laws and the Lie algebra algorithm is intriguing but tricky for me.

Thanks for your interest ~~~~

What about an explanation video about the MoE architecture (Mistral)?

Oh, interesting! I thought there were enough blogs and explainers about MoE and decided not to cover it. Now I am considering it again. :)

I second this, even if there are explanations out there, it’s more about discussing with someone we’re interested in discussing it with

@@rufex2001 Yeah, I agree with this, IMO, since you are more technically grounded than other channels.

Great video! Got a follow up question: what kind of finetuning is the finetuning provided by the openai API, where it finetunes a model based on a training set of Q&A pairs provided by the user?

Ask Sam Altman? 😅 I didn't see any recent technical paper about fine-tuning from OpenAI, nor do they explain on their website what they do. They are too open for us to comprehend.

Since they have large GPUs, it is safe to assume they are not forced to do parameter-efficient tuning like us noobs with Gaming GPUs.

So the main logic lies in the custom loss function, which is calculating higher loss for next token if it is far from the positive example?

Another question I had when reading the paper subtitle "Your Language Model is Secretly a Reward Model" was: in what way do they mean the language model is a reward model? To me it seems like they're not using a reward model at all, because they figured out that once they use a contrastive loss, they don't need one.

Great observation! I do not have a good guess on this, especially since even in RLHF, the model itself "is" (rather becomes) a reward model because the reward model is initialized with a copy of the original LLM. Now, they do not do reward modelling at all, so nothing is secretly a reward model. Maybe they just wanted a catchy title?

Or maybe "secretly" is a catchy way of saying "implicitly". Because implicitly, the language model itself can increase the reward by maximizing the likelihood of preferred examples and minimizing the likelihood of dispreferred examples. So implicitly, there is a "reward" defined by the likelihood and it comes directly from the language model (not from a reward model).

@@AICoffeeBreak Yes, maybe that's what they meant! It would make sense.
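To make the "implicit reward" reading in this thread concrete, here is a minimal sketch of the DPO loss for a single preference pair, written from the formula in the paper. The function names, the toy log-probabilities, and the value `beta = 0.1` are illustrative assumptions, not code from the authors. The "reward" of an answer is `beta` times the log-ratio between the finetuned model's likelihood and the frozen reference model's likelihood, and the loss is lower when the preferred answer gets the higher implicit reward.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def implicit_reward(policy_logp: float, ref_logp: float, beta: float = 0.1) -> float:
    """Implicit reward: beta * log(pi(y|x) / pi_ref(y|x)).

    A prompt-only term is omitted because it cancels within a pair.
    Inputs are sequence log-probabilities (sums of token log-probs).
    """
    return beta * (policy_logp - ref_logp)


def dpo_pair_loss(
    policy_logp_preferred: float,
    ref_logp_preferred: float,
    policy_logp_dispreferred: float,
    ref_logp_dispreferred: float,
    beta: float = 0.1,
) -> float:
    """DPO loss for one (preferred, dispreferred) pair: -log sigmoid(reward margin)."""
    margin = implicit_reward(policy_logp_preferred, ref_logp_preferred, beta) - implicit_reward(
        policy_logp_dispreferred, ref_logp_dispreferred, beta
    )
    return -log_sigmoid(margin)


# Toy numbers (assumed, for illustration only).
# Policy identical to the reference: margin is 0, so the loss is log 2.
loss_at_init = dpo_pair_loss(-12.0, -12.0, -15.0, -15.0)

# Policy raised the preferred answer and lowered the dispreferred one: lower loss.
loss_after_update = dpo_pair_loss(-10.0, -12.0, -17.0, -15.0)

print(loss_at_init, loss_after_update)
```

No separate reward model appears anywhere in this computation: the only inputs are likelihoods from the language model being trained and from its frozen reference copy.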

Great video! Question: is it possible to train an LLM with human feedback that is more complex than a simple positive/negative? For example, my data has 10 different possible values for feedback

Three values would still be possible with a triplet loss. But for 10, I wouldn't know how to implement it contrastively. :(

What if a model were distilled from an existing top LLM (e.g. GPT-4) so that it can read content about a topic, such as an article, and generate questions that may or may not be covered in that content? This model would be akin to a reward model, but it would help train the LLM to become more logical in its answers about the topic. Together with a reward model, would this allow an end-to-end training process that produces an LLM expert in a specific field? Or I guess similar methods already exist...

Does this only apply to transformers or would it also work with Mamba?

This training procedure is architecture independent, so I would say it works with Mamba as well. But of course, one would need to try it out as ML is a very empirical science. 🔭

It appears to me that this method is superior to RLHF because it demands well-curated, human-annotated examples. Did I miss something?

RLHF also trains on human annotations.

@@AICoffeeBreak Thanks for the comment. I see... it is essentially equivalent but more efficient since you don't have to train an extra model.

@@Micetticat exactly! 🎯

I am a newbie. I don't understand how evaluating the paper's results with GPT-4 is considered formal, rigorous analysis. Do you feel comfortable with it? There should be at least a claim about the DPO error rate in terms of the GPT-4 error rate.

I completely understand your concern, and there is no debate that a thorough human evaluation is preferable. Such human evaluation is costly though, so in the authors' defence: they did a small human study of their GPT-4 evaluation and showed that humans agreed with GPT-4 as much as they agree with each other.

Also, LLMs are much better at evaluating things than at generating, in the same way in which it is easier for you to correct a thesis than to produce it.

I was wondering, can we apply a similar concept of DPO to a more generic ranking task? This made me think about how triplet loss is equivalent to a softmax loss (in Soft Triplet Loss), but DPO seems to only deal with one-dimensional ranking. What if, instead of ranking the outputs relative to each other as better or worse, we applied the model to multiple aspects, i.e. non-binary ranking?

"Exploring connections between DPO and other ranking methods like Borda ranking or Condorcet voting could be fruitful for developing more sophisticated preference learning frameworks." by Google Bard

@thipoktham5164 I think it should be possible to adapt the loss function that way.

@leeme179 😂 lol

Wow! DPO was really simple. If its loss function is equivalent to RLHF, does that mean it theoretically will give as good results as RLHF, or can we draw some other conclusion from it? Good video explanation btw!

Yes, DPO and RLHF should be equivalent as long as you apply them to the same pool of human feedback and you do not use the reward model to annotate more data. This is the upside of training a reward model: after being trained on human-labelled pairs, it can annotate more data on its own and simulate human feedback.

Also, a reward model has the upside that we can train it on more fine-grained human annotations (which have been shown to improve RLHF), such as 3-way ranking. DPO works on just pairwise ranking and would have to be adapted for a more fine-grained setting.

@@AICoffeeBreak Thanks for your answer! It makes sense that they are equivalent only if you don't use it to annotate more data, since as you mentioned, RLHF can lead to reward hacking (I guess only in those cases where you do automatically annotate more data). What is 3-way ranking?

@@kristoferkrus when you have three answers and humans annotated which one is the best, the second best and the third best.

It's more fine-grained than just saying which one of two answers is best.

@@AICoffeeBreak Makes sense. Thank you! But I guess you could use a modified DPO loss for a three-way ranking where you take into consideration all three likelihoods. If L(A, B) is the DPO loss function when you have two pieces of generated text with likelihoods A and B, respectively, where the first piece is preferred to the second, and now you instead have A, B and C, I guess you could use something like L(A,B,C) = L(A,B) + L(B,C) (+ L(A, C)).
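The pairwise-sum idea from this comment, L(A,B,C) = L(A,B) + L(B,C) + L(A,C), can be sketched as below. This is the commenter's proposal written out, not a method confirmed by the video or taken from the paper's code. The helper names are hypothetical, and the inputs are assumed to be implicit rewards (`beta` times the log-ratio of policy to reference likelihood) listed from best-ranked to worst-ranked answer.

```python
import math
from itertools import combinations
from typing import Sequence


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_pair_loss(reward_preferred: float, reward_dispreferred: float) -> float:
    """Pairwise DPO-style loss on two implicit rewards."""
    return -log_sigmoid(reward_preferred - reward_dispreferred)


def ranked_dpo_loss(rewards_best_to_worst: Sequence[float]) -> float:
    """Sum the pairwise loss over every (higher-ranked, lower-ranked) pair.

    For three answers A > B > C this is L(A,B) + L(A,C) + L(B,C).
    """
    indices = range(len(rewards_best_to_worst))
    return sum(
        dpo_pair_loss(rewards_best_to_worst[i], rewards_best_to_worst[j])
        for i, j in combinations(indices, 2)  # yields pairs with i < j
    )


# Toy implicit rewards (assumed values) for answers ranked A > B > C by annotators.
loss_consistent = ranked_dpo_loss([0.4, 0.1, -0.3])  # model agrees with the ranking
loss_reversed = ranked_dpo_loss([-0.3, 0.1, 0.4])    # model disagrees with the ranking

print(loss_consistent, loss_reversed)
```

With only two answers this reduces to the ordinary pairwise loss, so the same function covers both the binary and the three-way annotation setting.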

@@AICoffeeBreak Could we train the reward model to give us scores and use them in DPO too? This would let us avoid the messy RL procedure. It would also be interesting to see how a ranking loss performs with DPO instead of a contrastive loss.

How DPO works under the hood: th-cam.com/video/Ju-pFJNfOfY/w-d-xo.html

Thanks for the video. I thought you were part of the chair tbh ^^

I am! Did you ever see me stand? 😅

Sometimes when you are talking, it looks like you are about to burst out laughing or like you are trying to keep a straight face 😂

I feel like you are too obviously reading a script on your screen, maybe you can seem more natural? Keep it up!