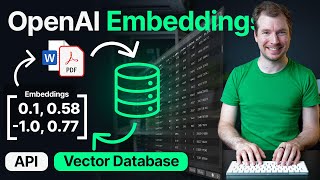

How to build chat with your data using Pinecone, LangChain and OpenAI

- Published on Feb 9, 2025

- Free walkthrough: zackproser.com...

Paid step-by-step tutorial: zackproser.com...

I show step by step how to build a Chatbot using Pinecone, LangChain and OpenAI in this easy to follow tutorial for beginners.

I ingest my entire blog full of MDX posts, chunk and convert them to embeddings using LangChain and OpenAI. Then I upsert them into my Pinecone vector database to build a knowledge base that our chatbot can use to answer questions.
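The chunking step described above can be sketched in plain Python. This is a minimal stand-in under stated assumptions, not LangChain's splitter and not the code from the video: `chunk_text`, `chunk_size`, and `overlap` are hypothetical names, and the split is a simple overlapping character window. Each resulting chunk is what would be embedded and upserted.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into overlapping character windows (hypothetical helper)."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")

    step = chunk_size - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


# Example: a 500-character post split into 200-character chunks
# that share 50 characters with their neighbor.
post = "".join(chr(97 + i % 26) for i in range(500))
chunks = chunk_text(post, chunk_size=200, overlap=50)
```

The overlap is a design choice: it keeps a sentence that straddles a boundary visible in both neighboring chunks, at the cost of storing some text twice.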

I build everything using a Jupyter Notebook to make it extremely easy to follow along.

Awesome, very nice, brother. Thanks for such a workflow demonstration, tysm.

@Arifkoyani Thanks so much for watching and your kind words 🙏

Glad you liked it

All the best

Your tutorial was very helpful. Please keep up the good work.👍

Thanks so much 🙏 I will!

Awesome vid!

Thank you so much 🙏

Really great tutorial, thanks a lot!

Thanks so much for the feedback and support 🙏 Glad it was useful. LMK what else you'd like to see in the future.

@zackproser I haven't thought of a specific topic yet, but I'll be glad to update you when I have one...

OpenAI v2 has a vector store feature now. It automatically splits documents into chunks and creates embeddings. Is there a way to use that instead of Pinecone and LangChain?

Hi @RajPatel-d4u and thanks for your question! Ah I wasn't aware of that yet, but it makes sense and I'm guessing it's an extension of the vector datastore they already had for processing the documents of the custom GPTs - yes, so long as their API supports query methods, you should be able to swap that in instead. I may do another video in the future examining that in more detail.

example tutorial, very clear and useful

Thanks so much 🙏 Glad you found it useful. Stay tuned for more.

Appreciate this video, man. Are you able to create a video similar to this, but for a complete beginner? I want to turn a video into embeddings and upload those embeddings into a vector database on Pinecone, but I have no clue how to do it. Thank you :)

Thanks, Brent! I actually have a free walkthrough post: zackproser.com/blog/langchain-pinecone-chat-with-my-blog as well as a paid step-by-step tutorial: zackproser.com/blog/rag-pipeline-tutorial

The paid tutorial includes: a Jupyter Notebook that demonstrates the data processing phase, and shows you how to put everything in Pinecone, plus a full example site that demonstrates the application consuming the vector store. It also walks you through how everything fits together.

I'll try to do another video on this piece soon. Thanks so much for watching and for your helpful feedback!

Zachary, if I have to add the API key directly rather than from the environment, where do I put it in your code above?

Hi Usman,

Thanks for your question! Are you saying that you're not able to export an environment variable that contains your key? In a Jupyter notebook host like Google Colab or Kaggle, you can use their secrets integration to set your Pinecone or any other API key. You then reference the secret using their library. Here's a link to a ton of example notebooks where we demonstrate this pattern: github.com/pinecone-io/examples

Let me know if that's what you mean or not!

Best,

Zack

@zackproser I want to ask whether setting the Pinecone API key in an environment variable is the only way to include it in the code.

Can I create a variable such as api_key, set it to the actual API key in the code, and then pass it to Pinecone?

@usmantahir2609 You could also hard-code your API key in your call to instantiate the Pinecone client, but I wouldn't recommend that from a security perspective.
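A minimal sketch of both options from this exchange: passing the key explicitly or reading it from an environment variable. `resolve_api_key` is a hypothetical helper, and `PINECONE_API_KEY` is an assumed variable name. The commented-out client call assumes a recent Pinecone Python client that exposes a `Pinecone` class taking `api_key`; older client versions were initialized differently, so check the version you have installed.

```python
import os
from typing import Optional


def resolve_api_key(explicit_key: Optional[str] = None,
                    env_var: str = "PINECONE_API_KEY") -> str:
    """Return the explicit key if given, else fall back to the environment."""
    key = explicit_key or os.environ.get(env_var)
    if not key:
        raise RuntimeError(
            f"No API key provided and {env_var} is not set in the environment."
        )
    return key


# Assumed usage with a recent Pinecone Python client (not executed here):
# from pinecone import Pinecone
# pc = Pinecone(api_key=resolve_api_key())                 # from the environment
# pc = Pinecone(api_key=resolve_api_key("YOUR_API_KEY"))   # hard-coded, not recommended
```

Keeping the fallback in one function means a notebook can start with a hard-coded key while experimenting and switch to the environment or a secrets integration without touching the rest of the code.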

@zackproser I tried hard-coding the API key, but it was not working as expected. Can you share the docs for that? Thanks.

@roopeshk.r3219 @zackproser Exactly, I am also facing this problem.

@roopeshk.r3219 Can you tell me your LinkedIn?

Hi, thanks for the video. Could you help me with this?

1: How do we prompt this agent?

2: How can I add this to a website, Slack, etc.?

Hi! My pleasure.

The prompt is fully visible here: zackproser.com/blog/langchain-pinecone-chat-with-my-blog

^ This link is to the free version of the walkthrough which still provides code but is specific to my website. You can see the context (related items / posts) is first fetched from Pinecone, and then the entire prompt - plus these items - is sent off to OpenAI for the generation and the response is streamed back.

If you want a step by step tutorial that provides the Jupyter Notebook to do the initial data processing and a full example site to show the API route (which contains the prompt for OpenAI's LLM) and the full UI, this is the one you want: zackproser.com/blog/rag-pipeline-tutorial

Hope that helps and let me know if you have other questions.
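The flow described in this reply (fetch related items from Pinecone, then send the prompt plus those items to OpenAI) can be sketched as a prompt-assembly step. This is an illustration under assumptions: `build_prompt` and the instruction wording are hypothetical, and the actual prompt is the one shown in the linked walkthrough.

```python
def build_prompt(question: str, context_items: list[str]) -> str:
    """Combine retrieved context and the user's question into one prompt."""
    # Number each retrieved item so the model can tell them apart.
    context_block = "\n\n".join(
        f"[{i}] {item}" for i, item in enumerate(context_items, start=1)
    )
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}"
    )


# Example with two made-up context snippets standing in for query results.
prompt = build_prompt(
    "What is RAG?",
    ["RAG retrieves documents before generating.", "Pinecone stores vectors."],
)
```

The resulting string would be sent to the LLM as the user or system message, and the response streamed back to the UI.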

How do I update the data? Do I have to delete the old index and upsert new embeddings?

Great questions - thanks for asking. I'll try to create videos + content for this shortly.

@zackproser I'm waiting... thank you in advance.
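One common approach, which this thread does not confirm: a Pinecone upsert overwrites the record when the ID already exists, so giving each chunk a deterministic ID lets you re-upsert a changed post without deleting the index. The sketch below uses an in-memory dict as a stand-in for the index; `chunk_id` and `upsert` are hypothetical helpers, not Pinecone calls.

```python
import hashlib


def chunk_id(source_path: str, chunk_index: int) -> str:
    """Derive a stable ID from the source file path and chunk position."""
    digest = hashlib.sha256(source_path.encode("utf-8")).hexdigest()[:16]
    return f"{digest}-{chunk_index}"


def upsert(index: dict, records: list[dict]) -> None:
    """Toy stand-in for an index: same ID overwrites, new ID inserts."""
    for record in records:
        index[record["id"]] = record


index: dict = {}
post = "posts/example.mdx"  # hypothetical path

# First ingestion, then a re-ingestion after the post was edited.
upsert(index, [{"id": chunk_id(post, 0), "text": "old text"}])
upsert(index, [{"id": chunk_id(post, 0), "text": "new text"}])
```

One caveat with this scheme: if the edited post produces fewer chunks than before, the leftover higher-numbered chunks stay in the index and need to be deleted separately.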

That's great content. How would you make the model have a memory on the chat?

Thanks so much for your feedback 😃 Great question - the TLDR is that you keep an ever expanding array of messages and pass them back and forth between the LLM and user each time. I may add an example of this in the future. You could also use a vector db to store the history and query it at inference time....
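The "ever expanding array of messages" idea can be sketched as follows. This is a minimal illustration, assuming the common role/content message shape; `Conversation` and `fake_llm` are hypothetical, and `fake_llm` stands in for a real chat model call so the example runs offline.

```python
from typing import Callable

Message = dict[str, str]


class Conversation:
    """Keeps the full message history and resends it on every turn."""

    def __init__(self, system_prompt: str,
                 llm: Callable[[list[Message]], str]) -> None:
        self.messages: list[Message] = [
            {"role": "system", "content": system_prompt}
        ]
        self.llm = llm

    def ask(self, user_text: str) -> str:
        self.messages.append({"role": "user", "content": user_text})
        # The model sees the whole history, which is what gives it "memory".
        reply = self.llm(list(self.messages))
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def fake_llm(messages: list[Message]) -> str:
    """Stand-in model: reports how many user turns it has seen."""
    user_turns = sum(1 for m in messages if m["role"] == "user")
    return f"I have seen {user_turns} user message(s)."


chat = Conversation("You are a helpful assistant.", fake_llm)
first = chat.ask("Hi, my name is Sam.")
second = chat.ask("What is my name?")
```

Because the history grows with every turn, long conversations eventually need trimming or summarizing, which is where the vector database option mentioned above comes in.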

Please provide a link to the document so it's easier to understand. It would take users less time to learn about LangChain and Pinecone.

Hi, thanks for your comment. Did you see the linked Notebook in the comments?

Hello, I could not find the document link. Please share it here or add it to the video description.

Great tutorial. I'm curious, how do I store all the messages from users and the AI?

1. User sends a message

Hi @naufal-yahaya - thanks for your support and for your question! Yes, I've recently spoken with a Pinecone developer who is doing exactly that - he shared that vector databases make an excellent place to store conversational history, because retrieval is so fast and accurate, and because you can skip having to send all that data back and forth each time.

While creating the RetrievalQA, I get this error: can't instantiate abstract class BaseRetriever with abstract methods _aget_relevant_documents, _get_relevant_documents

Hi, did you use the same Notebook I linked?

@zackproser No, I'm using the same code for the RAG pipeline.

Is it solved?

Did you put all of your blog posts on GitHub first?

My site is fully open source, which simplifies the data processing steps, because you can clone the whole data source with a single git command.

Hi,

Can you share the link to the Notebook?

Hi - sure - here are the two tutorials associated with this video, both of which have Jupyter Notebooks:

Free: zackproser.com/blog/langchain-pinecone-chat-with-my-blog

Paid (more example code + easier to fully run end to end): zackproser.com/blog/rag-pipeline-tutorial

I always find someone has already done it :) The world now has more like-minded people than ever before, like Chatbase. One question though: why do we need Pinecone when we could build this test without it?

You're right that you can technically do RAG with any type of database - including relational databases and non-vector databases. That said - vector databases particularly shine at semantic search - meaning searches that get at the actual meaning of your query instead of doing naive keyword matching.

The result is that the end user who is using your system can have that "magic" feeling as if the system truly understands them.

Pinecone specifically is also excellent for starting out and prototyping as well as for high scale + mature use cases because you do everything via API calls - create indexes, upsert vectors, query, delete, etc.

Good question and let me know if you have more!
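To make the semantic search point concrete, here is a toy similarity search in plain Python. It is a sketch under assumptions: the two-dimensional vectors are hand-made stand-ins for real embeddings, `cosine_similarity` and `top_k` are hypothetical helpers, and a vector database performs this kind of nearest-neighbor lookup at much larger scale.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: list[float], vectors: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the keys of the k vectors most similar to the query."""
    scored = sorted(
        ((cosine_similarity(query, vec), key) for key, vec in vectors.items()),
        reverse=True,
    )
    return [key for _, key in scored[:k]]


# Hand-made vectors: the first axis loosely means "pets", the second "finance".
vectors = {
    "post about cats": [1.0, 0.0],
    "post about dogs": [0.9, 0.1],
    "post about taxes": [0.0, 1.0],
}
matches = top_k([1.0, 0.05], vectors, k=2)
```

A query vector pointing toward "pets" retrieves both pet posts even though it shares no keywords with them, which is the behavior keyword matching cannot provide.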

Can you add a link to the notebook in the comments?

Updated the description!