Information entropy | Journey into information theory | Computer Science | Khan Academy


- Published on 27 Apr 2014

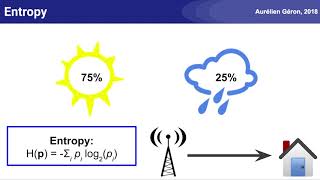

- Finally we arrive at our quantitative measure of entropy

Watch the next lesson: www.khanacademy.org/computing...

Missed the previous lesson? www.khanacademy.org/computing...

Computer Science on Khan Academy: Learn select topics from computer science - algorithms (how we solve common problems in computer science and measure the efficiency of our solutions), cryptography (how we protect secret information), and information theory (how we encode and compress information).

About Khan Academy: Khan Academy is a nonprofit with a mission to provide a free, world-class education for anyone, anywhere. We believe learners of all ages should have unlimited access to free educational content they can master at their own pace. We use intelligent software, deep data analytics and intuitive user interfaces to help students and teachers around the world. Our resources cover preschool through early college education, including math, biology, chemistry, physics, economics, finance, history, grammar and more. We offer free personalized SAT test prep in partnership with the test developer, the College Board. Khan Academy has been translated into dozens of languages, and 100 million people use our platform worldwide every year. For more information, visit www.khanacademy.org, join us on Facebook or follow us on Twitter at @khanacademy. And remember, you can learn anything.

For free. For everyone. Forever. #YouCanLearnAnything

Subscribe to Khan Academy’s Computer Science channel: / channel

Subscribe to Khan Academy: th-cam.com/users/subscription_...

I remember my teacher in high school defined entropy as "the degree of randomness". I decided it was an abstract concept that I don't get. Now learning about information entropy in my master's class, I found this video and I'm so glad I did!! Thanks, it's very well explained :)

good video. i like the way it shows the intuition behind the concept, that is the reason why the concepts actually exists rather than plainly defining it and then showing its properties.

couldn't agree more


This is one of the most informative -- and I use that term advisedly -- videos I have ever seen. Thank you!

at 1:24, i would argue the 3rd question (ie, the question on the right of 2nd hierarchy) should be

"Is it C?" (or "Is it D?")

rather than "Is it B?"

(i think this is so because, as the 1st machine answered "No" to the 1st question ["Is it AB?"], it essentially rules-out both A and B, leaving only C(or D) as the possible outcome; hence no role for "B" anymore)

yea, I agree with you.

dam, so many mistakes in this video, 1:24 and 3:50. makes me question their reliability... good video though

I thought I understood wrong lol.

Thank you!

But here the probability of D is 25%, which is more than 12.5%, so for the second question they ask "Is it D?".

this video blew my mind away. Thank you! I love these intelligent yet fun videos!

What a formidable way of visualizing and introducing information entropy. Your contributions are deeply appreciated

Awesome explanation with a very intuitive example.Thanks a lot...

Amazing video. Seldom seen a better explanation of anything. Thanks!

Thank you. Explains the intuition behind Entropy very clearly.

Ingenious interpretation! I applaud!

Wonderful idea of "bounce" that express the amount of information. It's so exciting.

I have one question:

Say p(A) = 0.45, p(B) = 0.35, p(C) = 0.15, p(D) = 0.05. Then 1/p(D) = 20 and log base 2 of 20 is approximately 4.3, yet the number of bounces for D should still be 3, shouldn't it? Would anyone mind explaining this discrepancy? Thanks a lot!
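One way to look at this question is to compare the average number of yes/no questions from an actual question tree with the entropy. The sketch below builds the tree with Huffman's algorithm, which is an assumption on my part (the video does not name it), and the helper `huffman_lengths` is a hypothetical name. Under these assumptions D still sits 3 questions deep: log2(1/p) only matches the depth exactly when every probability is a power of 1/2, and the entropy comes out slightly below the average number of questions.

```python
import heapq
from math import log2

def huffman_lengths(probs):
    """Return {symbol: number of yes/no questions} for a Huffman tree."""
    # Heap entries: (probability, tie-breaker, {symbol: depth so far}).
    heap = [(p, i, {s: 0}) for i, (s, p) in enumerate(probs.items())]
    heapq.heapify(heap)
    counter = len(heap)
    while len(heap) > 1:
        # Merge the two least likely groups; every symbol in them
        # moves one level deeper in the tree.
        p1, _, d1 = heapq.heappop(heap)
        p2, _, d2 = heapq.heappop(heap)
        merged = {s: depth + 1 for s, depth in {**d1, **d2}.items()}
        heapq.heappush(heap, (p1 + p2, counter, merged))
        counter += 1
    return heap[0][2]

probs = {"A": 0.45, "B": 0.35, "C": 0.15, "D": 0.05}
lengths = huffman_lengths(probs)
avg_questions = sum(probs[s] * lengths[s] for s in probs)
entropy = sum(p * log2(1 / p) for p in probs.values())

print(lengths)        # questions needed per symbol
print(avg_questions)  # average questions with this tree
print(entropy)        # lower bound on the average
```

With whole yes/no questions the average cannot drop below the entropy, which is why the two numbers differ for this distribution.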

Perfectly well explained. The best video on information entropy I’ve seen so far

This is such a great way to explain information entropy! Classic!

The concept had been presented to me on some online course, but until this video I didn’t really understand it. Thank you!

1:24 You are asking the same question twice. You already asked "Is it A or B?" at the root, and if the answer is no, it must be either C or D. But in the sub-branch you ask again whether it is B. It should be either "Is it C?" or "Is it D?".

yes, you are correct

note: number of bounces

- entropy is maximum when all outcomes are equally likely. When you introduce predictability, the entropy must go down.

thanks for sharing this video! God bless you!🎉

What a Beautiful Explanation!!!

I have asked several professors in different universities and countries, why we adopted a binary system to process information and they all answered because you can modulate it with electricity, the state on or off. This never satisfied me. Today I finally understand the deeper meaning and the brilliance of binary states in computing and its interfacing with our reality.

Brilliant explanation. So simple yet so profound. Thanks!

Loved the piano bit towards the conclusion!

Yessss I finally got the concept after this video.

Wow, what a presentation!

Huh??? Why am I finding out that information entropy was a concept. MIND BLOWN!!!

this is wonderful. thank you

Best explanation, salute...

Great video! I totally understood entropy!

What a video!!....This is how education should be.

It was perfect. thx

Very comprehensible, thank you!! It's very helpful

Perfect explanation! :)

I found a few errors. Am I the only one seeing this?

why can't textbooks or lectures be this easy

Tell me about it😢

Makes it all so super clear and easy to follow. Love this.

Just a tiny error at 3:50 - the final calculation shld be 0.25*2.

This video has explained entropy better than any teacher I've had in my entire life. It makes me so angry to think of all my time wasted in endless lectures, listening to people with no communication skills.

Kudos for linking number of bounces -> binary tree -> log. And overall very nice explanation. That's like 3rd explanation for info entropy i liked.

The most intuitive explanation

Really good one, thanks!

great explanation bro :)

The most beautiful explanation of entropy

Thank you so much. It explains the entropy so well.

just amazing.

just awesome

I can't imagine how someone could ever come up with such abstract ideas.

Excellent video. I think one point mistakenly refers to "information" when the author means 'entropy.' Machine 2 requires fewer questions. It produces more information and less entropy. Machine one produces maximum entropy and minimum information. Information is 'negative entropy.'

awesome video!

Great explanation.

nice!

I loved this surreal music and real life objects to move in a grey 60s like atmosphere.)

This should have more likes!

A powerful and clear explanation

you are truly a genius.

Thank you!

Thank You

How do you calculate the entropy of a text? And what can we do with that?
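One common starting point, offered here as a rough sketch and not as the method from the video, is to treat each character as an independent symbol and apply the same formula to the character frequencies. The function name `char_entropy` is hypothetical, and this estimate ignores dependencies between neighbouring letters, so it tends to overstate the entropy of real language.

```python
from collections import Counter
from math import log2

def char_entropy(text):
    """Estimate entropy in bits per character from character frequencies."""
    counts = Counter(text)
    total = len(text)
    # Sum of p * log2(1/p), where p = count / total for each character.
    return sum((c / total) * log2(total / c) for c in counts.values())

print(char_entropy("aaaa"))  # one symbol only, fully predictable
print(char_entropy("aabb"))  # two equally likely symbols
print(char_entropy("abcd"))  # four equally likely symbols
```

A number like this gives a rough lower bound on how many bits per character a symbol-by-symbol compressor would need for that text.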

Now I understand decision tree properly

The 'M' and 'W' are switched and upside down while the 'Z' is just a sideways 'N'...my vote is intentional 6:32

so just to clarify, is the reason the decision tree for machine B is not the same as for A as you ask less questions overall? and how do you ensure that the structure of the decision tree is such that it asks the minimum number of questions?

Isn't there a mistake at 3:50? Shouldn't it be 0.25 x 2 instead of 0.25 x 4?

+Francesco De Toni yup!

Yes, it's 2.25 I guess

It's indeed a mistake

@sighage No, it's 1.75; there was just a typo. You get 1.75 with 0.25 x 2

can't we just ask one question?

is it abc or d ?

edit: nevermind, i just figured that 1 bit removes uncertainty of 1/2

Great video, but is it just me or is there an error at 3:49? The correct calculation for the number of bounces should be 0.5*1+0.125*3+0.125*3+0.25*2 = 1.75, but the video shows 0.5*1+0.125*3+0.125*3+0.25*4, which would equal 2.25. Any thoughts?

I was just thinking this. Thank you for pointing it out. I thought maybe I misunderstood something fundamental.
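For anyone who wants to check the arithmetic, here is a small sketch using the machine 2 probabilities and bounce counts quoted in these comments (A: 1 bounce, B and C: 3 bounces, D: 2 bounces). The variable names are my own.

```python
from math import log2

machine_2 = {"A": 0.5, "B": 0.125, "C": 0.125, "D": 0.25}
bounces = {"A": 1, "B": 3, "C": 3, "D": 2}

# Average number of bounces, weighting each symbol by its probability.
expected_bounces = sum(machine_2[s] * bounces[s] for s in machine_2)

# Entropy computed from the formula sum of p * log2(1/p).
entropy = sum(p * log2(1 / p) for p in machine_2.values())

# The on-screen version with 0.25 * 4 in the last term.
with_typo = 0.5 * 1 + 0.125 * 3 + 0.125 * 3 + 0.25 * 4

print(expected_bounces, entropy, with_typo)
```

The first two values agree at 1.75, while the version with 0.25 * 4 gives 2.25, which is consistent with the on-screen term being a typo and the stated result being right.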

Nice!

Is there a direct analogy for the second and third law of thermodynamics and the information entropy?

Great video! I can follow it but I have trouble understanding the problem statement. Why "the most efficient way is to pose a question which divides the possibility by half"?

Too late, but maybe because we're trying to ask the minimum no. of questions (and therefore going with the higher probability first)?

Another way to look at entropy: Measure of distribution of probability in a probability distribution.

Good explanation! If I wanted to calculate the entropy with log2, which calculator can do this? Is there an online calculator for this? What would be the best approach?

Hans Franz Too late now, but log2 b = ln b / ln 2, or more generally log2 b = (log base a of b) / (log base a of 2).
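If a programming language is an option instead of a calculator, the change-of-base rule is easy to try out. This is a minimal sketch in Python, where the value `b = 20` is just an example I picked; `math.log` is the natural logarithm and `math.log2` is the built-in base-2 logarithm.

```python
from math import log, log2

b = 20  # example value only

via_natural_log = log(b) / log(2)  # change of base: ln b / ln 2
direct = log2(b)                   # built-in base-2 logarithm

print(via_natural_log, direct)
```

Both lines should print the same value up to floating-point rounding.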

Very good

Beautiful

Cool beat

3:51 Typo when computing the entropy for machine 2

previous lesson: th-cam.com/video/WyAtOqfCiBw/w-d-xo.html

next lesson: th-cam.com/video/TxkA5UX4kis/w-d-xo.html

thanks

Could anyone help explain why less uncertainty means less information (Machine 2)? Isn't it the other way round? Many thanks.

There is less uncertainty in machine 2 because on "average" there will be fewer questions. After many trials, on average 1.75 questions will be needed to get the right result, meaning there is less variety, randomness, and chaos in machine 2, due to the fact that "A" will be occurring a lot more than the other letters.

+TheOmanzano Yea so since the number of questions we need to ask, to guess the symbol, on an average, is less in machine 2 - so this should imply that machine 2 is giving us 'more' information 'per answer for a question asked' right? I'm really confused at its physical interpretation of information gained.

Right! Got it! So its not that we're getting 'more information per answer', we will be getting same amount of information for each question asked for whichever machine. The fact that we have to ask less number of questions is because there is 'less uncertainty' in the outcome; we already have some 'idea' or 'prediction' for some outcome implying there will be less information gained when the outcome is observed. *phew*

Think of it as a Hollywood film..where a police inspector interrogates a criminal and he must speak truth each and every time. After 175 questions the inspector found out that he knows no more than that, where as when he interrogated other criminal in an adjacent cell he found out that after asking 175 questions he can still answer 25 more..

Now U Tell Me Who Has More Information?

.

.

.

.

U are welcome!! 8)

HIRAK MONDAL That was a great example! Thank you so much!!!

cool

still confused why #outcome=1/pi

Because you need to "build" a binary tree to simulate the bounces. E.g. if you have probability p = 1/2 (50%), the number of outcomes is 1/(1/2) = 2. If you have p = 1/8 (12.5%), you get 8 outcomes. Taking log2 of that number gives the level at which the value sits in the binary tree.

Wow!

Why is the number of bounces the log of the outcomes?

So if I recall correctly, the source with the highest entropy is the least informative one. Then, if a machine generates symbols and we apply the formula to each symbol, which symbol provides the most information? The one with the fewest bits? How does that make sense? Isn't it the one with the most bits, calculated by p log(1/p)?

Why outcome is 1/p?

Probability of an outcome = 1 / number of possibilities

Heckin Shannon

What do they mean by number of outcomes? Can someone give me an example using the ABCD examples they used?

Yes, I asked myself this question and watch it twice. (5:45)

Count the number of branches at the bottom

The number of final outcomes = 2^(number of bounces)

Since the logarithm is the inverse of the exponential:

The number of bounces = the number of questions = log_2 (number of outcomes)
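The relationship in this thread can be tried out numerically. The sketch below assumes, as the comments do, that a symbol with probability p behaves like one of 1/p equally likely outcomes at the bottom of a binary tree; the probabilities are the ones from the ABCD example.

```python
from math import log2

# For each probability, count the equally likely outcomes at that level
# (1/p) and take log2 to recover the number of bounces.
levels = {}
for p in (0.5, 0.25, 0.125):
    outcomes = 1 / p
    levels[p] = log2(outcomes)
    print(f"p = {p}: outcomes = {outcomes:.0f}, bounces = {levels[p]:.0f}")
```

Going the other way, 2 raised to the number of bounces gives back the number of outcomes.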

Can anyone explain how the answer becomes 3/2 in the solved example? Any help will be appreciated

👏🏻

What if we used ternary ?

if it makes us ask less questions, doesn't it mean it provides more information?

Did anyone else notice the DEATH NOTE music at 4:40.

I was wondering what that music was, I really like it. Do you have a link? I found this following your comment: th-cam.com/video/hKfKYpba0dE/w-d-xo.html. Is that it? If so, can you point me to the right minute? Tnx

Hello please why does the number of outcomes at a level equal to 1/probability ?

What are some good books on this topic ?

An excellent excellent excellent video. I finally get it.

I don't understand why he always divides by 2 !!

Because the way the questions are framed, allow for only two possible answers - Yes or No

Super easy to understand

at @3:15 I think there's a typo? The last term should be 0.25*2 instead of 0.25*4 I guess.

The equation in 3:48 should result in 2.25 not 1.75

(0.5*1)+(0.125*3)+(0.125*3)+(0.25*4)= 2.25

I think it should have been

(0.5*1)+(0.125*3)+(0.125*3)+(0.25*2)

Why is entropy and information given the same symbol H, and why does the information formula given in video 5 of playlist include an "n" for the number of symbols transmitted, but this does not?

is it valid to say less entropy = less effort required?

4:45 Markoff or Markov ?

3:51 there is an error. You have a Pd*4 term instead of Pd*2.

can someone explain in #bounces=p(a)x1+p(b)x3+p(c)x3+p(d)x2 at 3:44 , how numbers 1,3,3 & 2 came?

x1 is the number of bounces needed to get to point A which is equal to 1 (steps needed for the disc to fall in case A) , x2=x3 is the number to get to points C and B separately and it equals to 3. For x4 it takes 2 bounces to fall in D

Why can't we ask whether it is AB, for the second distribution, same as the first distribution?

OHH i get it. If doing so, the average number of questions we ask will be bigger

At minute 3:51, I guess there is a mistake: for p_D, the value is 2 instead of 4, isn't it?

Programming Humannity anyone?

The Vietnamese subtitle at 4:34 is wrong: machine 2 produces less information than machine 1

How come machine two's entropy is more than one, if entropy's maximum is one?
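A possible source of the confusion: with base-2 logarithms, the maximum of 1 bit applies only when there are two outcomes. For n equally likely outcomes the entropy is log2(n), so four symbols can reach 2 bits. A small sketch to check this, where `entropy` is my own helper name:

```python
from math import log2

def entropy(probs):
    """Entropy in bits: sum of p * log2(1/p), skipping zero probabilities."""
    return sum(p * log2(1 / p) for p in probs if p > 0)

# Uniform distributions over 2, 4 and 8 symbols.
max_bits = {n: entropy([1 / n] * n) for n in (2, 4, 8)}
print(max_bits)

# Machine 2 from the video, with the probabilities quoted in the comments.
print(entropy([0.5, 0.125, 0.125, 0.25]))
```

Machine 2's 1.75 bits is above 1 but still below the 2-bit maximum for four symbols.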