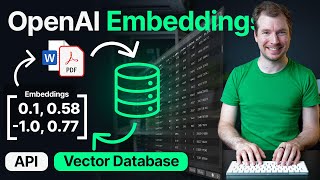

Vector Embeddings Tutorial - Code Your Own AI Assistant with GPT-4 API + LangChain + NLP


- Published on Feb 7, 2025

- Learn about vector embeddings and how to use them in your machine learning and artificial intelligence projects, then build an AI assistant with them. You'll use OpenAI's GPT-4 API, LangChain, and natural language processing (NLP) techniques.

✏️ Course created by @aniakubow

⭐️ Contents ⭐️

⌨️ (00:27) Introduction

⌨️ (01:49) What are vector embeddings?

⌨️ (02:14) Text embeddings

⌨️ (07:58) What are vector embeddings used for?

⌨️ (11:05) How to generate our own text embedding with OpenAI

⌨️ (14:37) Vectors and databases

⌨️ (16:02) Getting our database set up

⌨️ (18:05) LangChain

⌨️ (19:24) Let’s build an AI assistant

🎉 Thanks to our Champion and Sponsor supporters:

👾 davthecoder

👾 jedi-or-sith

👾 南宮千影

👾 Agustín Kussrow

👾 Nattira Maneerat

👾 Heather Wcislo

👾 Serhiy Kalinets

👾 Justin Hual

👾 Otis Morgan

👾 Oscar Rahnama

--

Learn to code for free and get a developer job: www.freecodeca...

Read hundreds of articles on programming: freecodecamp.o...

❤️ Support for this channel comes from our friends at Scrimba - the coding platform that's reinvented interactive learning: scrimba.com/fr...