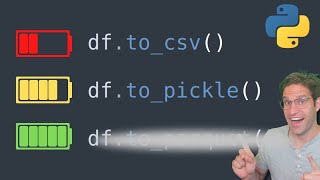

How to work with big data files (5gb+) in Python Pandas!

- Published on Aug 1, 2024

- In this video, we quickly go over how to work with large CSV/Excel files in Python Pandas. Instead of trying to load the full file at once, you should load the data in chunks. This is especially useful for files that are a gigabyte or larger. Let me know if you have any questions :).
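Below is a minimal sketch of the chunked approach. It assumes a CSV with columns named `brand` and `event_type`. Those names are borrowed from comments further down and may not match the real dataset's schema, and the chunk size is arbitrary. Each chunk is reduced to per-group counts on its own. The partial results are then added together, because the same group can show up in several chunks.

```python
import io
import pandas as pd

def count_events_in_chunks(source, chunksize=1_000_000):
    """Count (brand, event_type) pairs without loading the whole CSV at once."""
    partials = []
    # With chunksize set, read_csv returns a reader that yields DataFrames
    with pd.read_csv(source, usecols=["brand", "event_type"], chunksize=chunksize) as reader:
        for chunk in reader:
            # Shrink each chunk to per-group counts so only small summaries are kept
            partials.append(chunk.groupby(["brand", "event_type"]).size())
    # The same group can appear in several chunks, so add the partial counts together
    return pd.concat(partials).groupby(level=["brand", "event_type"]).sum()

# Tiny made-up CSV standing in for a multi-gigabyte file
csv_text = "brand,event_type\napple,view\nsamsung,view\napple,view\napple,purchase\n"
print(count_events_in_chunks(io.StringIO(csv_text), chunksize=2))
```

For a real file, pass its path instead of the `StringIO` object. Raise `chunksize` as far as your free memory comfortably allows.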

Source code on Github:

github.com/KeithGalli/Data-Sc...

Raw data used (from Kaggle):

www.kaggle.com/datasets/mkech...

I want to start uploading data science tips & exercises to this channel more frequently. What should I make videos on??

-------------------------

Follow me on social media!

Instagram | / keithgalli

Twitter | / keithgalli

TikTok | / keithgalli

-------------------------

If you are curious to learn how I make my tutorials, check out this video: • How to Make a High Qua...

Practice your Python Pandas data science skills with problems on StrataScratch!

stratascratch.com/?via=keith

Join the Python Army to get access to perks!

TH-cam - th-cam.com/channels/q6X.html...

Patreon - / keithgalli

*I use affiliate links on the products that I recommend. I may earn a purchase commission or a referral bonus from the usage of these links.

-------------------------

Video timeline!

0:00 - Overview

1:25 - What not to do.

2:16 - Python code to load in large CSV file (read_csv & chunksize)

8:00 - Finalizing our data

In my 3 years in the field of data science, this is the best course I've ever watched.

Thank you, brother, keep going.

Glad you enjoyed!

At the end of the video, it was fascinating to see how that huge amount of data was compressed to such a manageable size.

I agree! So satisfying :)

Since you are working with Python, another approach would be to import the data into an SQLite database, then create some aggregate tables and views...
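The SQLite route suggested above could look roughly like the sketch below. It assumes the same hypothetical `brand`/`event_type` columns. The table names `events` and `event_summary` are made up for illustration. pandas streams the CSV into the database one chunk at a time with `DataFrame.to_sql`, and SQLite then does the aggregation.

```python
import io
import sqlite3
import pandas as pd

def load_csv_into_sqlite(source, conn, chunksize=100_000):
    """Append a CSV to the (hypothetical) 'events' table one chunk at a time."""
    with pd.read_csv(source, chunksize=chunksize) as reader:
        for chunk in reader:
            # if_exists="append" creates the table on the first chunk, then appends
            chunk.to_sql("events", conn, if_exists="append", index=False)

def build_summary(conn):
    """Materialize an aggregate table inside SQLite and read it back."""
    conn.execute("DROP TABLE IF EXISTS event_summary")
    conn.execute(
        """
        CREATE TABLE event_summary AS
        SELECT brand, event_type, COUNT(*) AS n
        FROM events
        GROUP BY brand, event_type
        """
    )
    return pd.read_sql_query("SELECT * FROM event_summary ORDER BY brand, event_type", conn)

conn = sqlite3.connect(":memory:")  # use a file path such as "events.db" for real data
csv_text = "brand,event_type\napple,view\nsamsung,view\napple,view\n"
load_csv_into_sqlite(io.StringIO(csv_text), conn, chunksize=2)
print(build_summary(conn))
```

The advantage of this design is that the raw rows stay queryable on disk. New aggregates can be built later without re-reading the CSV.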

Glad you are back, my man! I am currently in a data science bootcamp, and you are way better than some of my teachers ;)

Glad to be back :). I appreciate the support!

Very timely info, thanks Keith!!

If (and only if) you only want to read a few columns, just specify the columns you want to process from the CSV by adding *usecols=["brand", "category_code", "event_type"]* to the *pd.read_csv* function. It took about 38 seconds to read on an M1 MacBook Air.
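Here is a sketch of the `usecols` tip. It assumes a CSV that has `brand`, `category_code` and `event_type` among other columns; `user_id` and `price` below are made-up filler. The `dtype="category"` part is an optional extra, not part of the comment. For repetitive text columns it often reduces memory use.

```python
import io
import pandas as pd

COLUMNS = ["brand", "category_code", "event_type"]

def read_selected_columns(source):
    """Parse only the listed columns; the rest of each line is skipped."""
    return pd.read_csv(
        source,
        usecols=COLUMNS,
        # Low-cardinality text columns are usually much smaller as 'category'
        dtype={col: "category" for col in COLUMNS},
    )

csv_text = (
    "user_id,brand,category_code,event_type,price\n"
    "1,apple,electronics.smartphone,view,999.0\n"
    "2,samsung,electronics.smartphone,cart,799.0\n"
)
df = read_selected_columns(io.StringIO(csv_text))
print(df.dtypes)
```

`usecols` can also be combined with `chunksize`. In that case, each chunk infers its own set of categories.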

It was quick and straight to the point. Very good one thanks.

No wonder I've had trouble with Kaggle datasets! "Big" is a relative term. It's great to have a reasonable benchmark to work with! Many thanks!

Definitely, "big" very much means different things to different people and circumstances.

Yeah, I've been trying to process a book in Sheets; processing 100k words (only a few MB) the way I'm trying to do it is already too much, lol.

I'd never used chunksize in read_csv before; it helps a lot! Great tip, thanks

Glad it was helpful!!

OMG... this is gold... thank you for sharing

You are a star my man... thank you

Well done, Keith 👍👍👍

Thank you :)

Great short video! Nice job, and thanks!

Glad you enjoyed!

Thanks, Keith. Please do more videos on EDA in Python.

Great video! Hope you start making more soon

Thank you! More on the way soon :)

Thanks for the great lesson! I'm wondering how the performance compares between output = pd.concat([output, summary]) and output.append(summary)?

Pandas has capabilities I didn't know about. Secret Keith knows everything

Lol I love the nickname "secret keith". Glad this video was helpful!

How would I go about it if it were a JSON Lines (.jsonl) data file?
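For JSON Lines, pandas' `read_json` accepts `lines=True` together with `chunksize`. That combination returns an iterator of DataFrames, much like `read_csv` does. The sketch below assumes each line is one JSON object with hypothetical `brand` and `event_type` keys.

```python
import io
import pandas as pd

def count_events_jsonl(source, chunksize=100_000):
    """Chunked (brand, event_type) counts for a JSON Lines file."""
    partials = []
    # lines=True + chunksize makes read_json yield DataFrames instead of one big frame
    with pd.read_json(source, lines=True, chunksize=chunksize) as reader:
        for chunk in reader:
            partials.append(chunk.groupby(["brand", "event_type"]).size())
    # Add up partial counts for groups that span several chunks
    return pd.concat(partials).groupby(level=["brand", "event_type"]).sum()

jsonl_text = (
    '{"brand": "apple", "event_type": "view"}\n'
    '{"brand": "samsung", "event_type": "view"}\n'
    '{"brand": "apple", "event_type": "view"}\n'
)
print(count_events_jsonl(io.StringIO(jsonl_text), chunksize=2))
```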

Cool videos, bro.

Can you cover loading and dumping JSON, please? :)

No guarantees, but I'll put that on my idea list!

Why and how do you use 'append' with a DataFrame? I get an error when I do the same thing. Only if I use a list instead and then concat all the DataFrames in the list do I get the same result as you.
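On the `append` error: `DataFrame.append` was deprecated in pandas 1.4 and removed in pandas 2.0. That would explain the error on a current install if the video was recorded with an older version. The list approach described in this comment is the usual replacement, and it also bears on the performance question. Calling `pd.concat([output, summary])` inside a loop copies all accumulated rows on every iteration. Concatenating a list once at the end copies them only once. Here is a minimal sketch with made-up per-chunk summaries:

```python
import pandas as pd

def combine_summaries(summaries):
    """Concatenate a list of per-chunk results in a single pd.concat call."""
    # One concat over the whole list avoids re-copying the growing result each loop
    return pd.concat(summaries, ignore_index=True)

parts = [
    pd.DataFrame({"brand": ["apple"], "count": [2]}),
    pd.DataFrame({"brand": ["samsung"], "count": [1]}),
]
print(combine_summaries(parts))
```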

This works fine if you don't have any duplicates in your data. Even if you de-dupe every chunk, aggregating it makes it impossible to know whether there are any dupes between the chunks. In other words, do not use this method if you're not sure whether your data contains duplicates.
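One way to handle the cross-chunk duplicate problem is to keep a set of row hashes across chunks. Each row is hashed with `pandas.util.hash_pandas_object`, and any row whose hash has already been seen is dropped. This is a sketch under stated assumptions, with several caveats:

- It trades memory for correctness, since the set holds one entry per distinct row.
- A 64-bit hash can in principle collide.
- It only works if every chunk parses each column to the same dtype. Passing an explicit `dtype=` to `read_csv` is advisable.

```python
import io
import pandas as pd
from pandas.util import hash_pandas_object

def iter_unique_rows(source, chunksize=100_000):
    """Yield chunks with rows removed if an identical row appeared earlier,
    including in a previous chunk."""
    seen = set()  # one hash per distinct row; this can grow large
    with pd.read_csv(source, chunksize=chunksize) as reader:
        for chunk in reader:
            # Hash each row's values (ignoring the index) to a uint64
            hashes = hash_pandas_object(chunk, index=False)
            # Keep rows that are neither repeated within this chunk nor seen before
            is_new = ~hashes.duplicated() & ~hashes.isin(seen)
            seen.update(hashes[is_new].tolist())
            yield chunk[is_new]

csv_text = "a,b\n1,x\n2,y\n1,x\n3,z\n2,y\n"
deduped = pd.concat(iter_unique_rows(io.StringIO(csv_text), chunksize=2))
print(deduped)
```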

Why not use groupby(...).size() instead of groupby(...).sum() on the column of 1s?
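This thread mentions three ways of counting: summing a helper column of 1s, `groupby(...).size()`, and `value_counts()`. All three give the same per-group counts. Here is a small check on made-up data:

```python
import pandas as pd

events = pd.DataFrame({
    "brand": ["apple", "samsung", "apple", "apple"],
    "event_type": ["view", "view", "view", "purchase"],
})
keys = ["brand", "event_type"]

# Add a column of 1s and sum it per group
with_ones = events.assign(count=1).groupby(keys)["count"].sum()

# Count rows per group directly
by_size = events.groupby(keys).size()

# Count unique key combinations (result is sorted by frequency, not by key)
by_value_counts = events.value_counts(keys)

print(with_ones.to_dict() == by_size.to_dict() == by_value_counts.to_dict())
```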

I get an error message on this one. It says 'DataFrame' object is not callable. Why is that, and how do I solve it? Thanks

    for chunk in df:
        details = chunk[['brand', 'category_code', 'event_type']]
        display(details.head())
        break

How did you define "df"? I think that's where your issue lies.
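Here is a sketch of how `df` likely needs to be defined for that loop to yield chunks: it has to come from `read_csv` with `chunksize` set. The in-memory CSV and chunk size below are made up. Separately, the message "'DataFrame' object is not callable" usually means a DataFrame is being called like a function somewhere. Examples include `df(...)`, or a name such as `display` having been overwritten with a DataFrame earlier in the notebook. Outside Jupyter, `print` stands in for `display`.

```python
import io
import pandas as pd

csv_text = (
    "brand,category_code,event_type,price\n"
    "apple,electronics.smartphone,view,999.0\n"
    "samsung,electronics.smartphone,cart,799.0\n"
)

# With chunksize, read_csv returns a reader that yields DataFrames.
# Without it, df is one DataFrame and looping over it yields column names.
with pd.read_csv(io.StringIO(csv_text), chunksize=1) as df:
    for chunk in df:
        details = chunk[["brand", "category_code", "event_type"]]
        print(details.head())  # display() is only available in IPython/Jupyter
        break
```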

Thank you for the video, it was really helpful. But I am still a little confused. Do I have to process every big file in chunks because it's necessary, or is it just a quicker way of working with large files?

The answer really depends on the amount of RAM that you have on your machine.

For example, I have 16 GB of RAM on my laptop. No matter what, I would never be able to load a 16 GB+ file all at once, because I don't have enough RAM (memory) to do that. Realistically, my machine is probably using about half the RAM for miscellaneous tasks at all times, so I wouldn't even be able to open an 8 GB file all at once. If you are on Windows, you can open Task Manager --> Performance to see how much memory is available. You could technically open a file as long as you have enough memory available for it, but performance will decrease as you get closer to your total memory limit. As a result, my general recommendation would be to load files in chunks basically any time the file is larger than 1-2 GB.
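The rule of thumb in this reply can be turned into a rough check. The sketch below is a heuristic under assumptions, not the author's method. It works in four steps:

1. Parse a sample of rows.
2. Measure their in-memory size with `DataFrame.memory_usage(deep=True)`.
3. Scale that to the full file size on disk.
4. Compare the estimate against the free memory you pass in, for example the figure Task Manager reports.

The `headroom=0.5` default loosely mirrors the "about half the RAM" remark above. The function names are hypothetical.

```python
import itertools
import os
import tempfile
import pandas as pd

def estimate_loaded_size(path, sample_rows=10_000):
    """Rough estimate of how many bytes the full CSV would take once loaded."""
    # On-disk bytes of the header plus the first sample_rows data lines
    with open(path, "rb") as f:
        sample_disk = sum(len(line) for line in itertools.islice(f, sample_rows + 1))
    # In-memory bytes of the same rows after parsing (deep=True counts string contents)
    sample = pd.read_csv(path, nrows=sample_rows)
    sample_mem = int(sample.memory_usage(deep=True).sum())
    # Scale the sample's memory-per-disk-byte ratio to the whole file
    return sample_mem * os.path.getsize(path) // max(sample_disk, 1)

def should_read_in_chunks(path, available_bytes, headroom=0.5):
    """True if the estimate exceeds the share of free RAM we are willing to use."""
    return estimate_loaded_size(path) > available_bytes * headroom

# Usage with a small throwaway file
with tempfile.NamedTemporaryFile("w", suffix=".csv", delete=False) as tmp:
    tmp.write("brand,event_type\n" + "apple,view\n" * 1000)
print(estimate_loaded_size(tmp.name))
print(should_read_in_chunks(tmp.name, available_bytes=8 * 1024**3))  # e.g. 8 GB free
os.remove(tmp.name)
```

The estimate assumes the sampled rows are representative of the rest of the file. Files whose later rows are much wider or narrower will throw it off.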

@TechTrekbyKeithGalli Thank you very much. I can't even describe how helpful this is to me :).

What about .SAV files?

I followed each step, but it gives this error:

OverflowError: signed integer is greater than maximum

How big is the data file you are trying to open?

Nice video! Working with big files when your hardware isn't at its best means there's plenty of time to make a cup of coffee, discuss the latest news...

Haha yep

But the new file has only 100,000 entries, not all the info. Are you ignoring the other data?
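Aggregating collapses rows rather than throwing them away. Each output row stands for many input rows, and the counts add back up to the original row count. Two things are dropped, though:

- Any column that isn't part of the grouping.
- By default, rows whose group keys are missing, since `groupby` uses `dropna=True`. Pass `dropna=False` to keep them.

Here is a small check on made-up data:

```python
import pandas as pd

events = pd.DataFrame({
    "brand": ["apple", "samsung", "apple", "apple", "samsung"],
    "event_type": ["view", "view", "view", "purchase", "cart"],
})

# One row per (brand, event_type) pair, holding how many events it represents
summary = (
    events.assign(count=1)
    .groupby(["brand", "event_type"], as_index=False)["count"]
    .sum()
)

# Fewer rows, but every original event is still accounted for in the counts
assert len(summary) < len(events)
assert summary["count"].sum() == len(events)
print(summary)
```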

Why did you use the count there?

If you want to aggregate data (make it smaller), counting the number of occurrences of events is a common method to do that.

If you are wondering why I added an additional 'count' column and summing, instead of just doing something like value_counts(), that's just my personal preferred method of doing it. Both can work correctly.

@TechTrekbyKeithGalli Thanks a lot for your videos, bro!!!!