Understanding quantum machine learning also requires rethinking generalization


- Published on 23 Dec 2024

- Title: Understanding quantum machine learning also requires rethinking generalization

Speaker: Elies Gil-Fuster from Freie Universität Berlin / Fraunhofer Heinrich Hertz Institute

Abstract:

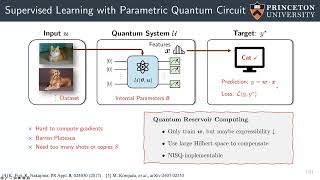

Quantum machine learning models have shown successful generalization performance even when trained with few data. In this work, through systematic randomization experiments, we show that traditional approaches to understanding generalization fail to explain the behavior of such quantum models. Our experiments reveal that state-of-the-art quantum neural networks accurately fit random states and random labeling of training data. This ability to memorize random data defies current notions of small generalization error, problematizing approaches that build on complexity measures such as the VC dimension, the Rademacher complexity, and all their uniform relatives. We complement our empirical results with a theoretical construction showing that quantum neural networks can fit arbitrary labels to quantum states, hinting at their memorization ability. Our results do not preclude the possibility of good generalization with few training data but rather rule out any possible guarantees based only on the properties of the model family. These findings expose a fundamental challenge in the conventional understanding of generalization in quantum machine learning and highlight the need for a paradigm shift in the design of quantum models for machine learning tasks.
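Why does fitting random labels undermine Rademacher-based guarantees? The empirical Rademacher complexity of a model class measures how well its best member can correlate with random ±1 labelings of a fixed training sample. The sketch below is an illustration only, not code from the talk or paper: it uses small, finite classical hypothesis classes rather than quantum neural networks, and the function name `empirical_rademacher` and both toy classes are hypothetical.

```python
import itertools
import random


def empirical_rademacher(predictions, num_draws=1000, seed=0):
    """Monte Carlo estimate of the empirical Rademacher complexity.

    `predictions` is a list of tuples, one per hypothesis f in a finite class,
    holding the values f(x_1), ..., f(x_m) (each +1 or -1) on a fixed sample of size m.
    """
    rng = random.Random(seed)
    m = len(predictions[0])
    total = 0.0
    for _ in range(num_draws):
        # Draw i.i.d. Rademacher signs sigma_i in {-1, +1}: a uniformly random labeling.
        sigma = [rng.choice((-1, 1)) for _ in range(m)]
        # Best average agreement any hypothesis in the class achieves with that labeling.
        total += max(sum(s * f for s, f in zip(sigma, preds)) / m for preds in predictions)
    return total / num_draws


m = 8
# Hypothetical class that can realize every +/-1 labeling of the m points (it can memorize anything).
all_labelings = list(itertools.product((-1, 1), repeat=m))
# Hypothetical class containing only the two constant classifiers.
constants = [(1,) * m, (-1,) * m]

print(empirical_rademacher(all_labelings))  # 1.0
print(empirical_rademacher(constants))      # a small value, well below 1
```

A class that fits every labeling always contains the random labeling itself, so it reaches the maximum value of 1. Uniform bounds of the form "test error ≤ training error + O(Rademacher complexity) + ..." then say nothing, which is the kind of failure the randomization experiments point to.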

arXiv: arxiv.org/abs/...