Stanford CS224W: ML with Graphs | 2021 | Lecture 16.3 - Identity-Aware Graph Neural Networks


- Published on Jun 1, 2021

- For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: stanford.io/3bu1hdH

Jure Leskovec

Computer Science, PhD

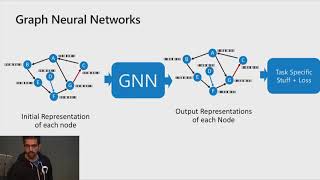

We introduce the idea of Identity-aware Graph Neural Networks (ID-GNN). We first summarize the failure cases of existing GNNs in node-level, edge-level, and graph-level tasks. To overcome these limitations, we introduce the idea of inductive node coloring, where we assign different colors to the node we want to embed vs. the rest of the nodes. We argue that inductive node coloring can help us overcome the limitations of existing GNNs. Moreover, ID-GNNs utilize this node coloring via a simple version of heterogeneous message passing. In summary, ID-GNN presents a general and powerful extension to the GNN framework and is the first message passing GNN that is more expressive than the 1-WL test. For more details, you can read a detailed overview of the paper or the full paper here: snap.stanford.edu/idgnn/
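As a rough illustration of why coloring the root node helps, the sketch below compares a node in a triangle with a node in a 4-cycle. It is not the ID-GNN implementation: it swaps the trained, heterogeneous message passing for a plain hash-style label refinement, and the function name `root_signature` and the two toy graphs are assumptions made up for this example.

```python
def root_signature(adj, root, num_layers, identity_aware):
    """Signature of `root` after `num_layers` rounds of neighbor aggregation."""
    # Coloring step: the root gets label 1, every other node gets 0.
    # Without identity awareness, all nodes start with the same label.
    labels = {u: int(identity_aware and u == root) for u in adj}
    for _ in range(num_layers):
        # Each node combines its own label with the sorted multiset
        # of its neighbors' labels (an injective stand-in for a GNN layer).
        labels = {
            u: (labels[u], tuple(sorted(labels[w] for w in adj[u])))
            for u in adj
        }
    return labels[root]


# Two 2-regular graphs: every node has an identical plain computational graph.
triangle = {0: [1, 2], 1: [0, 2], 2: [0, 1]}
square = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [0, 2]}

plain_same = root_signature(triangle, 0, 3, False) == root_signature(square, 0, 3, False)
colored_same = root_signature(triangle, 0, 3, True) == root_signature(square, 0, 3, True)
print(plain_same, colored_same)  # True False
```

Without coloring, the two nodes are indistinguishable at any depth. With coloring, they separate at three layers, because the triangle's cycle brings the root's color back at depth 3; with only two layers the colored signatures still coincide.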

To follow along with the course schedule and syllabus, visit:

web.stanford.edu/class/cs224w/

#machinelearning #machinelearningcourse


I have a question regarding the node coloring. For the coloring to matter, the colored node has to reappear in its own computational graph. This can happen only for specific classes of graphs: ones that are small, have high clustering coefficients, or have high connectivity. Otherwise, we need to use a larger k (for k-hops). So this solution is not universal?

From my understanding, that's theoretically correct, but the method makes a difference when it's necessary. The larger and sparser the graph is, the less likely we are to observe identical neighborhood structures (the failure case we are trying to fix) in the first place. And even then, the fast ID-GNN idea of just computing cycles beforehand can help.
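A minimal sketch of the "compute cycles beforehand" idea, assuming the cycle information is taken as closed-walk counts read off the diagonal of adjacency-matrix powers. The helper name `closed_walk_counts` and the toy graphs are hypothetical, and the dense pure-Python matrix product is for illustration only; a real pipeline would more likely use sparse matrices.

```python
def closed_walk_counts(adj_matrix, max_len):
    """For each node v, return [diag(A^1)[v], ..., diag(A^max_len)[v]]."""
    n = len(adj_matrix)
    # Start from the identity matrix, i.e. A^0.
    power = [[int(i == j) for j in range(n)] for i in range(n)]
    feats = [[] for _ in range(n)]
    for _ in range(max_len):
        # power <- power @ A, so after k iterations power equals A^k.
        power = [
            [sum(power[i][k] * adj_matrix[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)
        ]
        for v in range(n):
            # Diagonal entry = number of length-k walks that start and end at v.
            feats[v].append(power[v][v])
    return feats


triangle = [[0, 1, 1], [1, 0, 1], [1, 1, 0]]
square = [[0, 1, 0, 1], [1, 0, 1, 0], [0, 1, 0, 1], [1, 0, 1, 0]]

print(closed_walk_counts(triangle, 3)[0])  # [0, 2, 2]
print(closed_walk_counts(square, 3)[0])    # [0, 2, 0]
```

The length-3 entry already separates the triangle node from the 4-cycle node, so an ordinary GNN fed these counts as extra input features can tell them apart without unrolling a deeper computational graph.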