Everything about LLM Agents - Chain of Thought, Reflection, Tool Use, Memory, Multi-Agent Framework

ฝัง

- เผยแพร่เมื่อ 24 ก.ค. 2024

- How do LLM Agents work?

How does a language model understand the world, and know how to use tools/plugins/APIs?

How can we use LLMs as a System for more complicated tasks?

If you seek to find out the answers to these, this session is for you!

• Everything about LLM A...

~~~~~~~~~~~~~~~~

Slides: github.com/tanchongmin/Tensor...

My own referenced research:

Learning, Fast and Slow: • Learning, Fast and Slo...

LLMs as a System for the ARC Challenge: • LLMs as a system to so...

My own referenced framework:

StrictJSON: • Tutorial #5: Strict JS...

Reference Papers:

Planning:

ReAct: arxiv.org/abs/2210.03629

Reflexion: arxiv.org/abs/2303.11366

SayCan: say-can.github.io/

Tool Usage:

Visual ChatGPT: arxiv.org/abs/2303.04671

HuggingGPT: arxiv.org/abs/2303.17580

Voyager: arxiv.org/abs/2305.16291

Ghost in the MineCraft: arxiv.org/abs/2305.17144

Memory:

Retrieval Augmented Generation: proceedings.neurips.cc/paper/...

Recitation Augmented Generation (change the retrieved memory according to hints): arxiv.org/abs/2210.01296

Knowledge Graph as JSON - Generative Agents: Interactive Simulacra: arxiv.org/abs/2304.03442

Pyschology - Eyewitness Testimony (Loftus et al, 1975) - How memory retrieval is influenced by wording: link.springer.com/content/pdf...

Multi-agent:

AutoGPT: github.com/Significant-Gravit...

BabyAGI: github.com/yoheinakajima/babyagi

Camel - Society of Minds: arxiv.org/abs/2303.17760

ChatDev - Sequential Product Development using Camel: arxiv.org/abs/2307.07924

My relevant videos on LLMs:

How ChatGPT works: • How ChatGPT works - Fr...

SayCan: • High-level planning wi...

OpenAI Vector Embeddings: • OpenAI Vector Embeddin...

Generative Agents: Interactive Simulacra: • Learn from just Memory...

Voyager: • Voyager - An LLM-based...

Ghost in the MineCraft: • No more RL needed! LLM...

LLMs and Knowledge Graphs: • Large Language Models ...

LLM Agents as a System to solve a 2D Escape Room: • LLM Agents as a System...

~~~~~~~~~~~~~~~~

0:00 Introduction

0:38 Story of an Agent

30:40 What are agents?

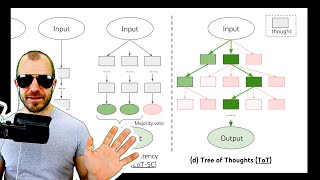

33:52 Chain of Thought to various levels of Abstractions

39:36 Incorporating World Feedback - ReAct and Reflexion

46:36 Voyager - Iterative Prompting with World Feedback

50:36 Tool Usage

1:03:30 Tool Learning and Composing

1:07:52 Memory

1:26:11 Multi-agent systems

1:38:04 Challenges of Implementing Agents

1:48:30 Discussion

~~~~~~~~~~~~~~~~

AI and ML enthusiast. Likes to think about the essences behind breakthroughs of AI and explain it in a simple and relatable way. Also, I am an avid game creator.

Discord: / discord

LinkedIn: / chong-min-tan-94652288

Online AI blog: delvingintotech.wordpress.com/

Twitter: / johntanchongmin

Try out my games here: simmer.io/@chongmin - เกม

![[ไฮไลต์] ฟุตบอลชาย อุซเบกิสถาน vs สเปน รอบแบ่งกลุ่ม | โอลิมปิก 2024](http://i.ytimg.com/vi/2ps5WKV1Mq0/mqdefault.jpg)

Thank you! Excellent teaching style 😊

As always, Fantastic video!!. Your content is just awesome. As you say "Food for thought", your video gives me a lot of content to explore :)

Hope you enjoy the exploration process, come join the discord group for more intriguing conversations!

Agents can eat breakfast three times in a row 😂🎉🎉 great video and accent 😅

1:11:29 The actual cosine similarity formula is Q.K / (||Q|| ||K||). However, since in OpenAI embeddings the magnitude of the Q and K vectors are all 1, we can omit the division

How good is it to train your own, say personal wiki with 3000+ notes that is both personal research and refined information from the internet and chatgpt? My main thing is to have the fine-tuned version of the LLM to output in a very specific format (little text, many technical bullet points and code snippets)?

I've heard a prompt is worth 1000 finetune data points and I'm confident I'm well past that. Is it more sane for a v0 with embedding, fine-tune, or even both?

My general guideline is prompt first before finetuning. If your use case cannot be prompted well even with few shot examples, then fine tuning is the only option.

Fine tuning a model leads to very specialised performance and may not generalise. Great if you are all right with a specialised use case.

Fine tuning plus prompting is the best for specialised use case. You can definitely do both.