Visualizing Convolutional Neural Networks | Layer by Layer

ฝัง

- เผยแพร่เมื่อ 27 ก.ค. 2024

- Visualizing convolutional neural networks layer by layer. We are using a model pretrained on the mnist dataset.

► SUPPORT THE CHANNEL

➡ Paypal: www.paypal.com/donate/?hosted...

These videos can take several weeks to make. Any donations towards the channel will be highly appreciated! 😄

► SOCIALS

X: x.com/far1din_

Github: github.com/far1din

Manim code: github.com/far1din/manim#visu...

---------- Content ----------

0:00 - Introduction

00:30 - The Model

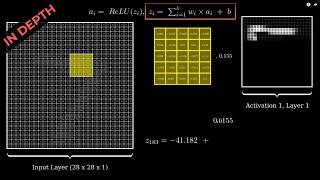

02:03 - Input and Convolution | Layer 1

02:46 - Max Pooling | Layer 1

03:30 - Convolution | Layer 2

04:29 - Max Pooling and Flattening | Layer 2

05:10 - The Output Layer (Prediction)

---------- Contributions ----------

Intro Music: @randomtypebeats7348

Background music: pixabay.com/users/balancebay-...

#computervision #convolutionalneuralnetwork #ai #neuralnetwork #neuralnetworksformachinelearning #neuralnetworksexplained #neuralnetworkstutorial #neuralnetworksdemystified #computervisionandai #animation #animationvideo

WWOWW! I need a video visualizing CNN from layer to layer like that, and I encountered ur channel. The best CNN visualization for me now. Tks u!

Thank you very much for this explanation. I have been struggling to understand how exactly convolutions work, and you explained it perfectly. Thanks keep up the good work bro.

I've been wanting a video like this for a long time, you nailed the balance between showing and explaining. I subscribed and liked the video.

Thank you my friend. Glad you got value out of it! 🔥

This is an excellent video explaining how convolutional layers work at a glance! Fantastically well done!

Literally thank you so much for your video. You don’t know how much relief you brought me in understanding it! Your voice is so clear and your explanations were just spot on and easy to understand. I’ve spent like weeks trying to figure out how the pieces go together and you have completed the puzzle of confusion in my mind. Thank you so much !!!

One of the best video explaining this topic. What a great job and thank you!

Excellent video. We needed this, thank you. Keep it up!

Amazing work! Please continue to make more videos on other ML topics. I find your videos are really helpful to understand the concepts.

This is art! Never delete this video bro!

Amazing visualization! I wish for more short vids like this!

Great video! So good and affordable explanation! Thank you, it helps me a lot!)

This was really helpful....Thank you so much for the vizualization...Keep up the good work...Looking forward to your future uploads.

Man, you're amazing! This is a perfect visualization, thank you God give you health!

Haha, thank you for the kind words my friend :D

Great video, Thank you!

Great video! you got me interseted!

You are fantastic! I look forward to watching your next videos. please produce mooore videos!

Thank you brother. Working on it! 😃🚀

Nice video! Thank you!

Hi there! Pretty cool video :D

One suggestion I would have would be to show how the output looks if we change the input.

For example, at 2:50 and 4:30 a 2x10 and 4x10 grid respectively where the ith column is the layer output for the ith digit.

thank you really needed this

This is my favourite channel. I especially like it when you explain everything so nicely. I wish you a lot of success with the channel and happy life.

That’s great to hear. I wish you all the best! 😃

Great Viz!!!

Nice walkthrough!

I am glad you enjoyed the content!

Bravo dude!

Love your video.

Very cool.

it's really informative for me. Tks bro!!

Thank you brother. I will try to post a more in depth video soon!

Awesome video🫶

thank you from Silicon Valley Startup

Hello user-zn1hm6tk1o Do let me know if you're in need of an intern !

keep em coming brodie!

For sure! :)

excellent. 3 video and 1.89 k subscriber !!! it's amazing, and you are amazing .

Thank you my friend! 💯

Wow. Such a high quality content with great effort. U deserve million subscribers.. Hope soon u ll get popular and make more videos, 🙌

Haha, stay tuned! 🚀🔥

why is the background music soo soothing xD I want to focus not lost in thoughts xD

Haha, next time myfriend. I will turn down the music 🚀

thank u!!

I gotta say your animation is great and the way you explain it is really good. It feels like 3blue 1brown and just as well explained. Keep the good work!

Thank you my friend 🔥

🧡🖤

best

🚀

Love it! Sub+

Great Video, a quick question, does kernel values (in every conv. layer) changes during training of CNN? Does the kernel changes it self so as to only extract exactly the shapes and pattern required for classification ?

Convolutional kernels will train their weights such that they “learn” whatever feature detection is most desirable.

I believe that is a “yes” to your question.

1. Does the kernel values change? It depends. Normally it does, but you can also freeze the network and train for example only the last 3 layers. This is common for transfer learning: th-cam.com/video/FQM13HkEfBk/w-d-xo.html

2. It definetly looks like that is the case if you look at a network post training. Why it extract certain shapes and patterns are still unknown for me.

4:10 How are the outputs of the two convolutions for each filter combied to get 1 filter map / filter?

So, you multiply the values from one filter with the corresponding input layer. Then you add together the outputs + the bias. Then you pass them through an activation funtion (in this case the sigmoid). After this you move the filter one step to the right (since the stride is one) and do the same thing until everything is covered.

This is hard to explain in text. I will do my best to get out an in depth video by this weekend where everything will be explained in detail! 💯

@@far1din I think I get it. Anyway thanks for making these videos, they help a lot.

@@ratfuk9340 th-cam.com/video/jDe5BAsT2-Y/w-d-xo.html

So we use filters to catch the features, and maxpooling to capture the most active feature? Thus if we use maxpooling of higher dimensions, we loose the other less significant features to reduce the model complexity but results in loss of information?

That is the idea and it seems to be working in practice. However, people use average-pooling and min-pooling also. It all comes down to what yields the best result in the end! :D

A lot of this is just trial and error until the best performance is acchieved.

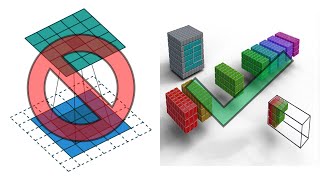

For the convolution, you have two filters through which you pass the original image and you get two images which have been passed by the filter. But for the second convolution, you have 4 filters each for the two images, so a total of 8 filters for the two images. 4 for one and 4 for the other. But in the visualization, dissimilar to the first convolution, you run both the filters through both the images in parallel and then combine them. So instead of getting 8 images from the second convolution, we get 4. Why?

Great question my friend. This is one of the reasons why I felt I had to make this animation.

First of all, in the second convolution, we have one “image” consisting of two channels. You can think of an RGB (colored) image. These images consists of three channels that you stack together. See reference 1 for image. This basically implies that if you have a 28 x 28 pixel RGB image, when you extract the values for the three channels (Red, Green and Blue) you will have three matrices. The dimensions are therefore actually 28 x 28 x 3, where 3 is the number of channels.

Now, when you build a convolutional neural network, you choose how many filters you want in each layer. If you have X amount of channels in the inputted image, each of the filters will have X amount of channels. Examples:

Input = 28 x 28 x 3 and you choose five filters with dimensions 5 x 5, you will technically have five filters that are 5 x 5 x 3. Let’s say you run the convolution. Your outputted activation layer will have the dimensions 24 x 24 x 5. Where 5 is the number of channels.

This implies that if you choose to have a convolutional layer next with two filter with the dimensions 7 x 7, these will actually have the dimensions 7 x 7 x 5.

I hope this made sense and I know it can be a bit confusing. I highly recommend you watch Andrew NG’s video on convolutions over volumes (Reference 2) as he explains the concept very well.

Reference 1: media.geeksforgeeks.org/wp-content/uploads/Pixel.jpg

Reference 2: th-cam.com/video/KTB_OFoAQcc/w-d-xo.html

Great job.. U got a new subscriber 🎉😊

4:24 kind of wild how after this layer there's no resemblance or perceived logic to our eyes how it's characterizing the digit. Can you show maybe 10 examples each of the 10 different digits at this step (just the output)? I wonder if they will look similar in pattern to each other right before the fully connected final layer.

It is very strange how all og this just «works». I will try to do that for one of my next videos! 😊

What do use use for making videos ?

Manim!

www.manim.community

Why there are 2 parts of each filter in layer 2? Is this just for the different color channels?

Yes my friend. If there where four channels that were convoluted over, then each filter would have had four channels as well.

The amount of channels in the filter will mirror that of the input! 💯

I didn't get why in 1st convolution after passing through 5*5 filter image of size 28*28 we've ended up with image of size 24*24. Stride is 1 hence filter should perfectly marsh till the end of the image with no bypassing certain pixels. Probably I miss smth but what?

Brother, for example, let’s say you have an image with the size of 7 x 7 and a filter that is 5 x 5. You start at the left, where the left side of the filter touch the left side of the image. You slide it to the end where the filters **right** side touch the right side of the image. Since the filter is 5 x 5, you can only slide it two times to the right. Essentially, 5 «squares» of the image is already covered when you start, and there is only two more left.

I will highly recommend you draw this out and «scale down» the image to 7 x 7 or something to get some clarification. I’ll also link a video that show the process.

Watch the animations in this video for clarification: th-cam.com/video/tQYZaDn_kSg/w-d-xo.html

@@far1din Bro, I've got it !!! Thank You so much for a great clarification and a link. That helped a lot.

If you start from the last layer with a 1 on the 7 and 0 on the rest then do the reverse process, how would the image look like?

My friend, I do not understand. Could you explain in detail? :)

@@far1din i mean trying to reverse the process from a given output all the way to a generated image and see a visualization of what the network "understands" of a 7 for example. I was seeing some videos of people reversing AI outputs in this way for deep dream and gpt and they found very weird stuff, like for example very weird tokens in GPT that causes the model to completely break

@@figloalds I believe I've seen this tried somewhere and it'll look nothing like a '7'. Basically it can't generate images, you'd just get random gibberish static looking pixels turned on. I also think if you input a smiley face or letter, unless specifically trained for "not a number" scenario, you may get an inconclusive output or falsely confident on one number.

Even though the math of the process checks out, nothing about this whole concept makes any intuitive sense, which is why I find it fascinating tbh lol

@@Leuel48Fan Yes haha. I believe I have seen this been done on a dumbbell. It was very noisy, but you could still see the outer line. However, I was unable to find it now 🤷🏾♀️

@@figloalds Ahaa, yes I know what you are talking about. This is a great idea. This would be what the computer perceives as the ideal «7» or ideal «1». I will try to make a video on that! 🚀

why use 2nd convolution with 8 filters

Hello my friend, the number of filters are typically choosen. A lot of deep learning is trial and error. I used 4 filters in the second convilution and these filters had two channels. Therefore, technically four filters. There is no underlying reason for this as this video was just meant to visualize the process. I could have gone with 10 filters, but the video would have been impossible to render.

hi, how to make 5*5 kernel? just random?

It is initialized randomly if that is what you were asking! :)

How do you create the visualizations

Manim!

docs.manim.community/en/stable/

Then you verify it’s a 7,and the AI algorithm gets better. Does the machine learn what is a 7? or does it learn how to get positive feedback from us. If we use advanced AI to ask advanced questions. Do we actually know for certain, it is not lying to us to get good feedback? Advanced AI like chat gtp 4 which is rapidly advancing will already is much smarter than 99% of humans, potentially is a lying manipulating horrible thing. It’s better to make 100% sure it is truthfull and fully understand intentions before making it smarter and more powerfull, train it woth more data, more computers, ect…

Hello, my friend. Here is my take on this topic. First of all, these kinds of AI models are mathematical models that learn to get the "correct" response. This is called supervised learning, where the model is trained on labelled data. It is hard to define whether or not a model is lying, as you have to define lying. Is it lying if the answers are correct? The concerns are valid, as models can converge towards outputting responses that are not correct but perceived as such. This is something researchers and users should be wary about.

On the other hand, human intelligence and deep neural networks seem to take different paths. Even though deep neural networks are inspired by how the brain works and are capable of many of the "intelligent tasks" we throw at them, the learning process is not the same. Modern deep learning models use backpropagation while humans don't (or at least, that is the perception for now). Although we come to the same answer, the consciousness factor might differ. Therefore, it is hard to tell if a model is lying because lying is something I would like to think of as a conscious act.

You may find it beneficial to watch an interview with Geoffrey Hinton, as he discusses some of the concerns you've raised: th-cam.com/video/qpoRO378qRY/w-d-xo.html

@@far1dinI would argue that humans do use back propagation. When you make a mistake in life, how many times have you looked back to ask yourself what you would have done differently to minimize the negative effects.