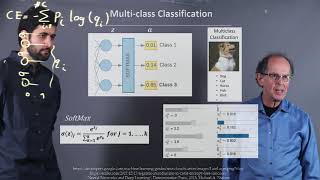

Cross-Entropy Loss Log-likelihood Perspective

ฝัง

- เผยแพร่เมื่อ 9 ก.พ. 2025

- This is a video that covers Cross-Entropy Loss Log-likelihood Perspective

Attribution-NonCommercial-ShareAlike CC BY-NC-SA

Authors: Matthew Yedlin, Mohammad Jafari

Department of Computer and Electrical Engineering, University of British Columbia.

I really like that you guys hand clean the clear board. Feels like back in the classroom + math teather's small math talk!

Great lectures. Thank you Matt Yedlin.

love your video hope to see more of it.

you just cleared my doubt about likelihooh and log likelihood which I had for past yaer and half.

Thank you so much

Well explained using human language.

Hi, thank you so much! I am self lerner, with no much of formal background. Can you please explain how SUM p_i log q_i is entropy, because it does not have minus sign. If it would be log (1/q_i) we would get minus sign out of it but its not. I'm stuck there...

Muhammad is a good student

I want to know how you can write on the mirror and record it.

The camera sees the writing flipped through a mirror.

thanks!

Nice

*(EDIT: Solved it, see comment reply)*

I don't follow how you go from the case of numerical example, where the likelihood is a product of predicted and observed probabilities p_i and q_i each raised to the number of times they occur, to the algebraic expression of the likelihood where you take the product of q_i raised to N * p_i (or is that N_p_i? I'm a little unsure if the p_i is a subscript of the N or multiplied by it).

I worked it out. The answer is to remember that the number of times the outcome i, with probability p_i occurs can be expressed by rearranging the definition p_i = N_p_i / N and substituting this into the expression for the likelihood in the general form that follows from the numerical example:

L = Π q_i ^ N_p_i ,

giving

L = Π q_i ^ N*p_i

Can the old guy.