Shusen Wang

United States

Joined Aug 20, 2020

Staff Engineer @ Meta

RL-1G: Summary

This lecture is a summary of Reinforcement Learning Basics.

Slides: github.com/wangshusen/DRL.git

Views: 1,501

Videos

RL-1F: Evaluate Reinforcement Learning

1.2K views, 3 years ago

Next video: th-cam.com/video/DLO401mNOw4/w-d-xo.html

To compare two reinforcement learning algorithms empirically, you can use OpenAI Gym. This lecture introduces three kinds of problems:

- Classical control problems, such as CartPole and Pendulum.
- Atari games, such as Pong, Space Invaders, and Breakout.
- MuJoCo tasks, such as Ant, Humanoid, and Half Cheetah.

Slides: github.com/wangshuse...

RL-1E: Value Functions

3.4K views, 3 years ago

Next video: th-cam.com/video/Rv7uC9v6Eco/w-d-xo.html Value functions are the expectations of the return. Action-value function Q evaluates how good it is to take action A while being in state S. State-value function V evaluates how good state S is. Slides: github.com/wangshusen/DRL.git
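The two definitions above can be written out as follows. This is a sketch in common textbook notation, not taken from the description itself: the symbols $U_t$ (the return from time $t$) and $\pi$ (the policy) are assumptions.

```latex
% Action-value function: expected return given the current state and action
Q_\pi(s_t, a_t) = \mathbb{E}\left[\, U_t \mid S_t = s_t,\ A_t = a_t \,\right]

% State-value function: the action-value averaged over actions drawn from the policy
V_\pi(s_t) = \mathbb{E}_{A \sim \pi(\cdot \mid s_t)}\left[\, Q_\pi(s_t, A) \,\right]
```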

RL-1D: Rewards and Returns

1.6K views, 3 years ago

Next video: th-cam.com/video/lI8_p7Qeuto/w-d-xo.html The return, also known as the cumulative future reward, is defined as the sum of all future rewards. The discounted return gives smaller weights to rewards that lie further in the future. Slides: github.com/wangshusen/DRL.git
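As a minimal sketch of the difference between the return and the discounted return, the snippet below weights the reward k steps ahead by `gamma**k`. The function name `discounted_return`, the discount factor `gamma`, and the reward values are illustrative assumptions, not taken from the lecture.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum future rewards, weighting the reward k steps ahead by gamma**k."""
    total = 0.0
    # Accumulate backwards using U_t = R_t + gamma * U_{t+1}.
    for r in reversed(rewards):
        total = r + gamma * total
    return total


rewards = [1.0, 1.0, 1.0]  # hypothetical future rewards

# gamma = 1 gives the plain (undiscounted) sum of future rewards.
undiscounted = discounted_return(rewards, gamma=1.0)

# gamma < 1 shrinks the contribution of rewards further in the future.
discounted = discounted_return(rewards, gamma=0.5)

print(undiscounted, discounted)
```

With `gamma=0.5`, the three rewards contribute 1, 0.5, and 0.25, so later rewards count for less.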

RL-1C: Randomness in MDP, Agent-Environment Interaction

1.4K views, 3 years ago

Next Video: th-cam.com/video/MeoSqrV5a24/w-d-xo.html

A Markov decision process (MDP) has two sources of randomness:

- The action is randomly sampled from the policy function.
- The next state is randomly sampled from the state-transition function.

The agent can interact with the environment. Observing the current state, the agent executes an action. Then the environment updates the state and prov...
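The two sources of randomness can be sketched with a toy example. Everything below is hypothetical: the two states, the two actions, and all probabilities are made up for illustration, and rewards are left out.

```python
import random

# Hypothetical policy pi(a | s): probability of each action in each state.
POLICY = {
    "s0": {"left": 0.7, "right": 0.3},
    "s1": {"left": 0.4, "right": 0.6},
}

# Hypothetical state-transition function p(s' | s, a).
TRANSITION = {
    ("s0", "left"): {"s0": 0.9, "s1": 0.1},
    ("s0", "right"): {"s0": 0.2, "s1": 0.8},
    ("s1", "left"): {"s0": 0.8, "s1": 0.2},
    ("s1", "right"): {"s0": 0.1, "s1": 0.9},
}


def sample(dist, rng):
    """Draw one outcome from a {outcome: probability} dictionary."""
    outcomes = list(dist)
    weights = [dist[o] for o in outcomes]
    return rng.choices(outcomes, weights=weights, k=1)[0]


def rollout(start, steps, rng):
    """Run the agent-environment loop and record (state, action, next_state)."""
    state = start
    trajectory = []
    for _ in range(steps):
        action = sample(POLICY[state], rng)                    # randomness 1: policy
        next_state = sample(TRANSITION[(state, action)], rng)  # randomness 2: transition
        trajectory.append((state, action, next_state))
        state = next_state
    return trajectory


trajectory = rollout("s0", steps=5, rng=random.Random(0))
for step in trajectory:
    print(step)
```

Running the rollout with a different seed generally gives a different trajectory, because both the action and the next state are sampled.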

RL-1B: State, Action, Reward, Policy, State Transition

3.5K views, 3 years ago

Next Video: th-cam.com/video/0VWBr6dBMGY/w-d-xo.html This lecture introduces the basic concepts of reinforcement learning, including state, action, reward, policy, and state transition. Slides: github.com/wangshusen/DRL.git

RL-1A: Random Variables, Observations, Random Sampling

3.2K views, 3 years ago

Next Video: th-cam.com/video/GFayVUt2WGE/w-d-xo.html This is the first lecture on deep reinforcement learning. It introduces the basic probability theory used in reinforcement learning. The topics include random variables, observed values, the probability density function (PDF), the probability mass function (PMF), expectation, and random sampling. Slides: github.com/wangshusen/DRL...
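A small sketch of how a PMF, an expectation, and random sampling relate, using only the Python standard library. The PMF values are hypothetical and chosen only for illustration.

```python
import random

# Hypothetical PMF of a discrete random variable X: value -> probability.
pmf = {0: 0.2, 1: 0.5, 2: 0.3}

# Expectation: sum of each value weighted by its probability.
expectation = sum(x * p for x, p in pmf.items())

# Random sampling: draw observed values of X according to the PMF.
rng = random.Random(0)
samples = rng.choices(list(pmf), weights=list(pmf.values()), k=10_000)

# The average of many observed values approximates the expectation.
sample_mean = sum(samples) / len(samples)

print(expectation, sample_mean)
```

The random variable is `X`, each entry of `samples` is an observed value, and the sample mean approaches the expectation as the number of samples grows.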

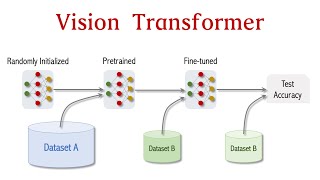

Vision Transformer for Image Classification

122K views, 3 years ago

Vision Transformer (ViT) is the new state-of-the-art for image classification. ViT was posted on arXiv in Oct 2020 and officially published in 2021. On all the public datasets, ViT beats the best ResNet by a small margin, provided that ViT has been pretrained on a sufficiently large dataset. The bigger the dataset, the greater the advantage of the ViT over ResNet. Slides: github.com/wangshusen/...

BERT for pretraining Transformers

13K views, 3 years ago

Next Video: th-cam.com/video/HZ4j_U3FC94/w-d-xo.html Bidirectional Encoder Representations from Transformers (BERT) is for pretraining the Transformer models. BERT does not need manually labeled data. BERT can use any books and web documents to automatically generate training data. Slides: github.com/wangshusen/DeepLearning Reference: Devlin, Chang, Lee, and Toutanova. BERT: Pre-training of dee...

Transformer Model (2/2): Build a Deep Neural Network (1.25x speed recommended)

13K views, 3 years ago

Next Video: th-cam.com/video/EOmd5sUUA_A/w-d-xo.html The Transformer models are state-of-the-art language models. They are based on attention and dense layers without RNNs. In the previous lecture, we built the attention layer and the self-attention layer. In this lecture, we first build multi-head attention layers and then use them to build a deep neural network known as the Transformer. Transforme...

Transformer Model (1/2): Attention Layers

28K views, 3 years ago

Next Video: th-cam.com/video/J4H6A4-dvhE/w-d-xo.html The Transformer models are state-of-the-art language models. They are based on attention and dense layers without RNNs. Instead of studying every module of the Transformer, let us try to build a Transformer model from scratch. In this lecture, we eliminate RNNs while keeping attention. We will get an attention layer and a self-attention layer. In...

Self-Attention for RNN (1.25x speed recommended)

8K views, 3 years ago

Next Video: th-cam.com/video/FC8PziPmxnQ/w-d-xo.html Attention was originally applied only to Seq2Seq models, but it is not limited to Seq2Seq. When applied to a single RNN, attention is known as self-attention. This lecture teaches self-attention for RNN. In the original paper of Cheng et al. 2016, attention was applied to LSTM. To make self-attention easier to understand, this lectur...

Attention for RNN Seq2Seq Models (1.25x speed recommended)

31K views, 3 years ago

Next Video: th-cam.com/video/06r6kp7ujCA/w-d-xo.html Attention was originally proposed by Bahdanau et al. in 2015. It later found much broader applications in NLP and computer vision. This lecture introduces only attention for RNN sequence-to-sequence models. The audience is assumed to know RNN sequence-to-sequence models before watching this video. Slides: github.com/wangshusen/Deep...

Few-Shot Learning (3/3): Pretraining + Fine-tuning

30K views, 3 years ago

This lecture introduces pretraining and fine-tuning for few-shot learning. This method is simple but comparable to the state-of-the-art. This lecture discusses 3 tricks for improving fine-tuning: (1) a good initialization, (2) entropy regularization, and (3) combining cosine similarity with a softmax classifier. Slides: github.com/wangshusen/DeepLearning Lectures on few-shot learning: 1. Basic concep...

Few-Shot Learning (2/3): Siamese Networks

57K views, 3 years ago

Next Video: th-cam.com/video/U6uFOIURcD0/w-d-xo.html This lecture introduces the Siamese network. It can find similarities or distances in the feature space and thereby solve few-shot learning. Slides: github.com/wangshusen/DeepLearning Lectures on few-shot learning: 1. Basic concepts: th-cam.com/video/hE7eGew4eeg/w-d-xo.html 2. Siamese networks: th-cam.com/video/4S-XDefSjTM/w-d-xo.html 3. Pretr...

17-4: Random Shuffle & Fisher-Yates Algorithm

2.2K views, 3 years ago

5-2: Dense Matrices: row-major order, column-major order

4.6K views, 4 years ago

5-1: Matrix basics: additions, multiplications, time complexity analysis

4.2K views, 4 years ago

Few-Shot Learning (1/3): Basic Concepts

78K views, 4 years ago

2-1: Array, Vector, and List: Comparisons

4.3K views, 4 years ago

Wonderful explanation

I am very enlightened. Thank you

Clear and good explanation, good lecture, thanks

extremely clear and easy to follow explanation

Great explanation 👏

You explained it well for us, thank you very much

Great explanation

Excellent and outstanding. Thank you so much. Please add time complexities for all operations

What does it mean when the gradient propagates back to the CNN as well? What is changed in the CNN?

I think I need to take another look at CNN parameters!

Thank you for this video. It's awesome

nice

Which app do you use to make presentations? How do you hide some images/arrows in the slides like an animation? Thanks.

Aren't Siamese networks performing fine-tuning when the model weights are learned to perform the task?

This was very clear, thank you!

What a detailed explanation, loved the way you explain things, thank you very much sir.

Great explanation. Thank you

Wonderful explanation!👏

This is hands down the best explanation of Siamese networks on YouTube

How is data A trained? I mean, what is the loss function? Does it use only the encoder, or both the encoder and decoder?

It's 2024, please stop using a potato as a microphone

Thank you so much for this series of lectures and slides. I am doing a thesis on few-shot learning and this has really helped me understand the fundamentals of this algorithm.

Absolutely gorgeous! Thank you so much!

The class token 0 is in the embed dim, does that mean we should add a linear layer from embed to number of classes before the softmax for the classification?

12:51 Just had to say that the two animals in your support set image aren't hamsters. Those are guinea pigs.

this is supposed to be english?

I feel like autoencoder can be used for the classification task and might work better. Because autoencoder can map the input into a latent space which captures the patterns.

please explain deletion when you have time, especially on how to memorize the pointers along the search path

very clear explanation, professor

nice explanation. thanks

Best lecture about Few-shot learning! Thank you

Thank you so much! Great explanation

Great explanation! Good for you! Don't stop giving ML guides!

So the training set is much bigger than the support set? And I only use the support set to help with the classification of query images?

Is there any implementation of this architecture, bro? I can't find one.

Best Explanation of skiplist

Softmax associates during learning, and identifies during inference

Clear, concise, and overall easy to understand for a newbie like me. Thanks!

very clear nice

Thank you!

Thank you. I like the explanation

Awesome. Well explained. Well simplified.

Best Video on this topic so far!

At 19:26, should the number of weights be m*t+1, or am I getting it wrong? Because we have c0 as well

This is an excellent presentation

It's easy to understand. Thank you.

The best video so far. The animation is easy to follow and the explanation is very straightforward.

The best lecture about transformers that I've seen 🙏🏻🙏🏻🙏🏻🙏🏻🙏🏻

Very good explanations thank you very much

Thank you, very explicit explanation. Wonderfully explained, teacher! Thank you!

That was great and helpful 🤌🏻