- 55

- 234 011

Leslie Myint

Joined Apr 13, 2013

Videos

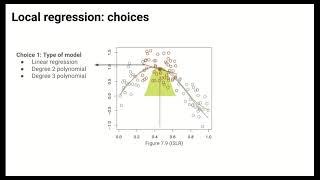

Local Regression and Generalized Additive Models

16K views, 3 years ago

KNN Regression and the Bias-Variance Tradeoff

4.3K views, 3 years ago

Modeling Nonlinearity: Polynomial Regression and Splines

12K views, 3 years ago

Sensitivity Analyses for Unmeasured Variables

9K views, 4 years ago

Estimating Causal Effects: Inverse Probability Weighting

18K views, 4 years ago

Asking Clear Questions and Building Graphs

1.3K views, 4 years ago

thank you very much for the good lecture!

Thank you this channel is carrying my exam 🫶

very good

so gooooood!!

Great video - thank you

Thank you very much for the wonderful explanation. I have one question regarding IPW. What are the advantages and disadvantages of IPW compared to regression? Thank you

I am often wondering why you stopped making these incredibly well-explained videos. Thanks!

Leslie's explanations are crystal clear and balanced. Awesome resource!

great explanation, thank you!

Thank

Leslie, very good material. A question: what should I do if I have a dataset with a large number of variables (or up to how many variables is computationally acceptable), and does the number of observations matter? Which method should I follow if my computers cannot handle it?

the best video i have watched on statistical ml

One of the most significant benefits of local regression is that it allows you to easily estimate a regularized derivative. It's practically the best method for differentiating any measurements.
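
The idea in the comment above can be sketched as follows: fit a weighted straight line around a target point and read its slope as a smoothed derivative estimate. This is a minimal sketch, assuming a tricube kernel and a fixed bandwidth. The function names, the toy data, and the bandwidth value are illustrative assumptions and are not taken from the video.

```python
def tricube(u):
    """Tricube kernel weight for a scaled distance u; zero when |u| >= 1."""
    a = abs(u)
    return (1 - a ** 3) ** 3 if a < 1 else 0.0


def local_linear_fit(xs, ys, x0, bandwidth):
    """Fit a kernel-weighted least-squares line around x0.

    Returns (fitted value at x0, slope at x0). The slope serves as a
    smoothed estimate of the derivative of the underlying curve at x0.
    """
    ws = [tricube((x - x0) / bandwidth) for x in xs]
    sw = sum(ws)
    if sw == 0:
        raise ValueError("no points fall inside the bandwidth")
    # Weighted means of x and y.
    xbar = sum(w * x for w, x in zip(ws, xs)) / sw
    ybar = sum(w * y for w, y in zip(ws, ys)) / sw
    # Weighted sums of squares and cross-products.
    sxx = sum(w * (x - xbar) ** 2 for w, x in zip(ws, xs))
    sxy = sum(w * (x - xbar) * (y - ybar) for w, x, y in zip(ws, xs, ys))
    if sxx == 0:
        raise ValueError("need at least two distinct weighted points")
    slope = sxy / sxx
    value = ybar + slope * (x0 - xbar)
    return value, slope


# Toy data (an assumption for illustration): y = x^2 on an even grid over [0, 2].
xs = [i / 10 for i in range(21)]
ys = [x ** 2 for x in xs]

# The true derivative of x^2 at x = 1 is 2.
value_at_1, slope_at_1 = local_linear_fit(xs, ys, x0=1.0, bandwidth=0.5)
print(slope_at_1)
```

The bandwidth controls how much smoothing, and therefore how much regularization of the derivative, is applied. Full loess implementations typically choose the neighborhood by a nearest-neighbor span instead of a fixed width, which this sketch does not attempt.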

thanks mam

Thank you so much for this content

Awesome

Very helpful, one of the best on bagging and random forests I have seen.

Thank you for the simple explanation. I was stuck in a research paper with a causal graph diagram which did not include much information. This video saved my day <3

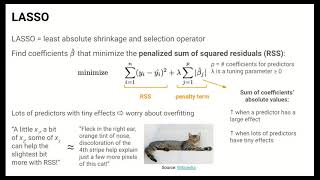

Hi, superb lecture, but I have one doubt that I hope you will clear up. All of the videos about lasso use a regression model to explain it. Since it is also a feature selection method, can we use it for feature selection in a classification problem? PCA is used for both regression and classification. Moreover, classification deals with categorical data, which is converted into numerical values by default. So can we also use lasso for feature selection in classification problems? If yes, why are there no examples of it? Thanks in advance.

At 9:45, when you divided the data into two strata by education, are the graphs correct? When you select the low-education stratum and plot Y(a=1) and Y(a=0) (treatment and no treatment), you still cannot observe the counterfactual, so how come you have a double-line graph again in both cases, Ya=1 and Ya=0? How are you observing both effects (double-line graph) within both the treatment and control groups? Was that a hypothetical graph?

This was truly informative! Thanks!

Amazing explanation. Thank you

Great explanation 👌

Perfect

You are a goddess Leslie... your voice... your intellect <3

Finally I get this stupid topic...

these videos are all extremely well done. clean recordings, good explanations, thanks for your efforts!!

Great explanation! The only thing I do not understand is why it is called the "natural" xxxx effect. Thanks!

great illustration of Verma and Pearl's inductive causation algorithm. Spirtes et al. was the first practical application of the algorithm. this video explains things better than reading a book for an hour.

Is there any cutoff for when the associations count as strong enough to qualitatively change the results?

thx for teaching well

Very cool, thank you!

7:56 Hey, I recognize that. It's Sugeno fuzzy logic! Makes sense, since we are talking about inference. I've been wondering how one could mix graphs and fuzzy logic, but wasn't exactly looking for it. What a nice surprise.

Hello miss, great video, but just one thing. How do we assume that the potential outcomes are independent of the treatment? Isn't this a bit counterintuitive? If we want to measure the causal effects between the treatment and the outcome then why are we assuming them to be independent? Thanks again for the video.

Please share the code in the description box

great

Are you by any chance Burmese? I am just curious. Thank you for the video.

Great video! Thank you very much!

Very easy to understand. Thank you!

well-explained!

This is the best explanation of ignorability I have ever heard!

are there any code examples with this tutorial?

So you cannot have a collider in a DAG? A collider is said here to be undirected...

Awesome explanation!

very well explained! good work!

Why do we need the penalty term? can we not just have the RSS without it?

Yes, we can use RSS alone, but RSS alone can lead to an overfit model when we have lots of predictors (some of which are likely uninformative in predicting the outcome). The penalty term encourages predictors that help little or not at all to be eliminated from the model.
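
A minimal sketch of the point in the reply above, assuming the penalty in question is the lasso (L1) penalty and that the predictors and outcome are already centered, so no intercept is fitted. The function names, the toy data, and the `lam` values are illustrative assumptions and are not taken from the video.

```python
def soft_threshold(rho, lam):
    """Shrink rho toward zero by lam; return exactly 0.0 when |rho| <= lam."""
    if rho > lam:
        return rho - lam
    if rho < -lam:
        return rho + lam
    return 0.0


def lasso_coordinate_descent(X, y, lam, n_iter=200):
    """Minimize (1 / (2n)) * RSS + lam * sum(|beta_j|) by coordinate descent.

    X is a list of rows and y a list of outcomes. Setting lam = 0 gives
    plain least squares, i.e. RSS without any penalty.
    """
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    for _ in range(n_iter):
        for j in range(p):
            # Correlation of predictor j with the residual that ignores predictor j.
            rho = sum(
                X[i][j] * (y[i] - sum(X[i][k] * beta[k] for k in range(p) if k != j))
                for i in range(n)
            ) / n
            z = sum(X[i][j] ** 2 for i in range(n)) / n
            beta[j] = soft_threshold(rho, lam) / z
    return beta


# Toy data (an assumption for illustration): the first predictor matters a lot,
# the second barely matters. y = 3 * x1 + 0.2 * x2 exactly.
X = [[1, 1], [-1, 1], [1, -1], [-1, -1]]
y = [3.2, -2.8, 2.8, -3.2]

beta_rss_only = lasso_coordinate_descent(X, y, lam=0.0)
beta_penalized = lasso_coordinate_descent(X, y, lam=0.5)
print(beta_rss_only, beta_penalized)
```

With `lam=0.0` both predictors keep nonzero coefficients (about 3.0 and 0.2). With `lam=0.5` the weak predictor's coefficient is set to exactly zero and the strong one is shrunk to about 2.5, which is the elimination effect the reply describes.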

Thank you so much for such an amazing explanation!

The best explanation I heard for IPW estimator!

Beautiful slides, and very clear too. Thank you <3

This was really helpful, thanks a lot!