- 29

- 163 683

MLSS Iceland 2014

เข้าร่วมเมื่อ 17 พ.ย. 2014

Kernel methods and computational biology -- Jean-Philippe Vert (Part 1)

Kernel methods and computational biology -- Jean-Philippe Vert (Part 1)

มุมมอง: 3 408

วีดีโอ

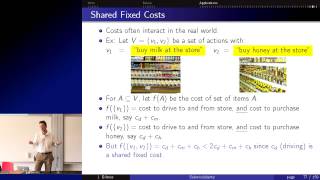

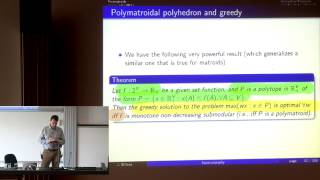

Submodularity and Optimization -- Jeff Bilmes (Part 2)

มุมมอง 1.5K9 ปีที่แล้ว

Submodularity and Optimization Jeff Bilmes (Part 2)

Bayesian Uncertainty Quantification for Differential Equations -- Mark Girolami (Part 2)

มุมมอง 1.5K9 ปีที่แล้ว

Bayesian Uncertainty Quantification for Differential Equations Mark Girolami (Part 2)

Bayesian Uncertainty Quantification for Differential Equations -- Mark Girolami (Part 1)

มุมมอง 3.3K9 ปีที่แล้ว

Bayesian Uncertainty Quantification for Differential Equations Mark Girolami (Part 1)

Submodularity and Optimization -- Jeff Bilmes (Part 3)

มุมมอง 1K9 ปีที่แล้ว

Submodularity and Optimization Jeff Bilmes (Part 3)

Hamiltonian Monte Carlo and Stan -- Michael Betancourt (Part 2)

มุมมอง 16K9 ปีที่แล้ว

Hamiltonian Monte Carlo and Stan Michael Betancourt (Part 2)

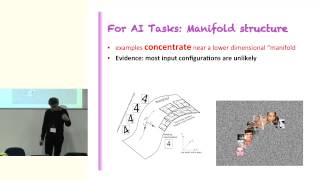

Introduction to Machine Learning -- Neil Lawrence (Part 1)

มุมมอง 4.6K9 ปีที่แล้ว

Introduction to Machine Learning Neil Lawrence (Part 1)

Probabilistic Modelling -- Iain Murray (Part 2)

มุมมอง 2.1K9 ปีที่แล้ว

Probabilistic Modelling Iain Murray (Part 2)

Robust Inference -- Chris Holmes (Part 2)

มุมมอง 4989 ปีที่แล้ว

Robust Inference Chris Holmes (Part 2)

Big Data and Large Scale Inference -- Amr Ahmed (Part 2)

มุมมอง 4379 ปีที่แล้ว

Big Data and Large Scale Inference Amr Ahmed (Part 2)

Theoretical Issues in Statistical Learning -- Timo Koski (Part 2)

มุมมอง 4589 ปีที่แล้ว

Theoretical Issues in Statistical Learning Timo Koski (Part 2)

Submodularity and Optimization -- Jeff Bilmes (Part 1)

มุมมอง 3.8K9 ปีที่แล้ว

Submodularity and Optimization Jeff Bilmes (Part 1)

Probabilistic Programming and Bayesian Nonparametrics -- Frank Wood (Part 3)

มุมมอง 7929 ปีที่แล้ว

Probabilistic Programming and Bayesian Nonparametrics Frank Wood (Part 3)

Probabilistic Modelling -- Iain Murray (Part 3)

มุมมอง 1.8K9 ปีที่แล้ว

Probabilistic Modelling Iain Murray (Part 3)

Probabilistic Programming and Bayesian Nonparametrics -- Frank Wood (Part 2)

มุมมอง 1.8K9 ปีที่แล้ว

Probabilistic Programming and Bayesian Nonparametrics Frank Wood (Part 2)

Kernel methods and computational biology -- Jean-Philippe Vert (Part 2)

มุมมอง 1.3K9 ปีที่แล้ว

Kernel methods and computational biology Jean-Philippe Vert (Part 2)

Probabilistic Programming and Bayesian Nonparametrics -- Frank Wood (Part 1)

มุมมอง 7K9 ปีที่แล้ว

Probabilistic Programming and Bayesian Nonparametrics Frank Wood (Part 1)

What is Machine Learning: A Probabilistic Perspective -- Neil Lawrence (Part 2)

มุมมอง 3K9 ปีที่แล้ว

What is Machine Learning: A Probabilistic Perspective Neil Lawrence (Part 2)

Probabilistic Modelling -- Iain Murray (Part 1)

มุมมอง 5K9 ปีที่แล้ว

Probabilistic Modelling Iain Murray (Part 1)

Efficient Bayesian inference with Hamiltonian Monte Carlo -- Michael Betancourt (Part 1)

มุมมอง 44K9 ปีที่แล้ว

Efficient Bayesian inference with Hamiltonian Monte Carlo Michael Betancourt (Part 1)

Robust Inference -- Chris Holmes (Part 1)

มุมมอง 1K9 ปีที่แล้ว

Robust Inference Chris Holmes (Part 1)

Theoretical Issues in Statistical Learning -- Timo Koski (Part 1)

มุมมอง 6629 ปีที่แล้ว

Theoretical Issues in Statistical Learning Timo Koski (Part 1)

Big Data and Large Scale Inference -- Amr Ahmed (Part 1)

มุมมอง 1.1K9 ปีที่แล้ว

Big Data and Large Scale Inference Amr Ahmed (Part 1)

Kernel methods and computational biology -- Jean-Philippe Vert (Part 3)

มุมมอง 1.1K9 ปีที่แล้ว

Kernel methods and computational biology Jean-Philippe Vert (Part 3)

Probabilistic Modeling and Inference at Scale -- Ralf Herbrich (Part 2)

มุมมอง 4489 ปีที่แล้ว

Probabilistic Modeling and Inference at Scale Ralf Herbrich (Part 2)

Probabilistic Modeling and Inference at Scale -- Ralf Herbrich (Part 1)

มุมมอง 9299 ปีที่แล้ว

Probabilistic Modeling and Inference at Scale Ralf Herbrich (Part 1)

The performance of the card game makes people *realise that* the cards aren't symmetric, ie., that there's a face the audience is only allowed to see "the second face", and that the lecture necessarily chooses a card whose second face is black (to make the slides make any sense). The problem the lecturer **performed** was, "I am looking at three cards. These are oriented so that both faces are visible to me as WW, WB, BB. I choose a card so that I guarantee the second face is black. What's the probability that the first face is white?" The answer is 1/2. These kinds of "gotcha" examples always annoy me, since invariably, its the person posing the question who has no idea how profoundly irrational people like mathematicians understand problems vs. profoundly rational people like non-mathematicians. This then leads the i-am-smarter presenter to (implicitly) admonish the stupidity of people, etc. when the reality is that people's rational facilities are working exactly correctly. Animals could not survive for very long if the basics of probability were not absolutely obvious and intuitive; and are vastly too rational to pay attention to some nonsense on a slide at a plainly rigged performance. The performance should be, "There's a box of cards, each very simple, plain, and constructed the same way. 1/3 of the cards are white on both sides; 1/3 are black on both sides; and 1/3 have a black face and a white face. I close my eyes and pull out a card at random and hold it up to my face. I notice the side facing me is black. Which card is most likely in my hand, the white-white, whiteblack, or black black? What's the probability?" Then the generative reasoning is very obvious: I didnt draw the white-white; of the two options left, i could've draw then black-white card and see the white face first; etc. Intuitively, the black-black card is the safest single-card bet.

what does the "r" mean? what is the radial component? any reference?

Intro to HMC 58:20

14:45 - there's an error in the denominator. You should integrate all the other variables, except theta_1.

yes, that one threw me off.

To “simplify” the card problem, imagine the cards are random variables with two possible states, w or b. One type is always in state b, the other is 50% state a, 50% state b. If we observe a variable in state b, what’s the chance it’s the first type that never changes away from b vs the type half in state b half in state w. Then it’s obvious if we observe something in a black state that it makes it more likely to be black all the time vs white half the time. I dislike that the white-white card exists in this example at all because it misleads you as to the source of the 3 in the answer and complicates the example

watching this in 2020: everytime he coughed I feel alarmed lol

Please disregard the comment by HUY Nguyen, very interesting lecture

Is there any place where I can get the slides?

Great talk

this guy is awesome.

This is really good stuff

-What does coordinate parametrization invariant means? -can someone explain in 12:21 why does mass get's shifted? -What does the mass describe mathematically? d\theta pi(\theta) or the cdf inside the radius r?

nice pudding on that belly soyboy lol

what?

Very useful, thanks a million! In eq40 , should the first term in the sum has the subscript of i rather than k ?

I find the number of likes surprisingly low for such an awesome prefect lecture... it is great thanks for uploading

www.stat.columbia.edu/~gelman/bda.course/naes04.csv "Be careful - as we’ve learned how to better diagnose HMC fits we’ve discovered that this model didn’t fit as well as originally thought. In particular the hyperparameters were pretty poorly identified which makes complete sampling difficult." - Michael Betancourt via stan-users google group

To give another intuition on why the probability of the reverse being white is 1/3. You can imagine the generative process differently, instead of drawing a card, then drawing a face, you instead draw a face from a pool of 6 faces. Clearly out of these six faces 3 are white, 2 are black with a reverse that is also black, and 1 is black with a reverse that is white. It follows that given you drew a black face there is a 1/3 chance that the reverse is white.

Is there a new link for the dataset?

Yes, please back bring the example code and data!

At 35:54 it seems that there exists a typo in the third point. I should be "no less than" rather than "no more than". Since in submodularity the larger the set the less the marginal benefit it gains.

Thus far I've only read about Leapfrog integration being used here. Would there be any kind of advantage or disadvantage in using other symplectic integration schemes instead? Symplectic Euler is the simplest possible such algorithm. Velocity Verlet is basically Leapfrog except it keeps positions and momenta in lockstep. And there are higher order versions of that same algorithm* which would normally allow for a greater stepsize. - The question is, do such advantages (compared to the disadvantages of perhaps having to spend a greater computational effort per integration step) carry over to HMC or is Leapfrog really _the_ thing to do here? * see en.wikipedia.org/wiki/Symplectic_integrator#A_fourth-order_example

Yes, the exact dynamics is volume preserving, but only approximating it is not OK. In any case, the question was asking to use other symplectic integrators, which *are* volume preserving by definition.

One of the best lectures on the basics!!

Stan is Turing-complete, at least according to the Stan reference.

It would have been great if we could have seen the laser on the slides. It is kinda confusing where he is pointing to now :/

"400 million currency units"? Isn't that a very specific value for such an unspecific currency? :P

The first thing that struck me was the super cool beard. The second thing was the super cool accent. What is that accent? Is it some kind of Scottish?

The historical nonsense is irrelevant. couldn't keep watching after 20 minutes

Surely not! It is often very importaint to undrstand the intuition and background to a problem

Very interesting and useful lecture. Just a comment, the initial steps in a MCMC were called "Burn in" to put something into a place, not "burning" as to put something in fire, This also confounded when setting up computers with their firmware and software. ... Thank you for the lecture it has helped me a lot

great intro to graphical models

1:19:20 If more highly curved space sucks up more energy, could this be an explanation for dark matter? 1:17:39 This looks like an illustration of inflation theory. The mode being negative seems to indicate the presence of dark energy. Whereas if the mode was positive, the universe would contract.

InfiniteUniverse88 Ha ha, quite subjective obvervations, doctor Watson...

What happens when future observations (typically at the end of program execution) strongly condition the state at an earlier time? The mass will disappear and the variance will be huge. To put it another way, the filtering distribution and the smoothing distribution may not overall well at all. Then what?

really good summary of MCMC

Koski for president!